REQUEST COMPLIMENTARY SQLS*PLUS LICENCE

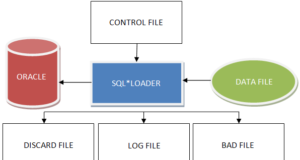

SQL*Loader: upload to Oracle database and conversion

In this article, we will talk about how to upload information to an Oracle database, namely, we will consider a utility to import data into an Oracle database called SQL*Loader.

Downloading and converting data

One of the most typical tasks of an Oracle database administrator (like any other) is to download data from external sources. Although it is usually performed during the initial filling of the database, it is often necessary to load data into different tables throughout the existence of the production database.

Previously, database administrators used to perform data loading from flat files to Oracle database tables only utility SQL*Loader.

Today, although the SQL*Loader utility still remains an important tool for loading data into Oracle databases, Oracle also offers another way to load tables, namely – recommends using the mechanism of external tables.

External tables use the functionality of SQL*Loader and allow you to perform complex transformations on them before loading data into the database. With their help, data can not only be loaded into the database but also uploaded to external files and then from these files to other Oracle databases.

In many cases, especially in data warehouses, the uploaded data needs to be converted. Oracle offers several tools for performing data conversion within a database, including SQL and PL/SQL technologies. For example, the powerful MODEL design allows you to create complex multidimensional arrays and perform complex calculations between strings and arrays using simple SQL code.

In addition, Oracle offers a useful data replication mechanism called Oracle Streams, which allows you to transfer changes from one database to another. This mechanism can be used for various purposes, including the maintenance of a backup database.

This chapter deals with all these issues related to the loading and conversion of data. But first, of course, it provides a brief overview of what the extraction, conversion, and loading process are all about.

A brief overview of the process of extracting, transforming, and loading data

Before launching any application in relation to Oracle Database, it must first be filled with data. One of the most typical data sources for database filling is a set of flat files from legacy systems or other sources.

Previously, the use of a standard or direct method of loading data using SQL*Plus was the only possible way to execute the loading of these data from external files to database tables. From the technical point of view, SQL*Loader still remains the main utility supplied by Oracle to perform data uploading from external files, but if you want you can also use the mechanism of external tables, which through the use of the SQL*Loader tool helps to access data contained in external data files.

Because the source data may contain excessive information or data in a format other than that required by the application, it is often necessary to convert it in some way before the database can use it. Conversion of data is a particularly common requirement for data warehouses that require data to be extracted from multiple sources. It is possible to perform a preliminary or basic conversion of source data when running the SQL*Loader utility itself.

However, complex data conversion requires separate steps, and there are several methods to manage this process. In most repositories, data goes through three main stages before it can be analyzed: extraction, transformation, and loading, all called the ETL process. Below is a description of what each of the steps represents.

- Extraction is the process of identifying and extracting source data, possibly in different formats, from multiple sources, not all of which may be relational databases.

- Data conversion is the most complex and time-consuming of these three processes and may involve applying complex rules to the data, as well as performing operations such as aggregation of data and applying different functions to them.

- Downloading is the process of data placement in database tables. It may also imply the execution of index maintenance and table-level restrictions.

Previously, organizations used two different methods to perform the ETL process: the method to perform a conversion and then download, and the method to perform the download and then conversion. The first method involves cleaning or transforming data before they are loaded into Oracle tables.

To perform this conversion, we usually use individually developed ETL processes. As for the second method, in most cases it does not take full advantage of Oracle’s built-in conversion capabilities; instead, it involves first loading data into intermediate tables and moving them to the final tables only after they have been converted inside the database itself.

Intermediate tables play a key role in this method. Its disadvantage is that tables have to support several types of data, some of which are in their original state, while others are already in the ready state.

Today, Oracle Database 11g offers simply stunning ETL capabilities, which allow you to load data into the database in a new way, namely, the method of performing their conversion during loading. By using the Oracle Database to perform all stages of ETL, all usually time-consuming ETL processes can be performed fairly easily. Oracle provides a whole set of auxiliary tools and technologies that reduce the time it takes to load data into the database, while also simplifying all related work. In particular, the ETL solution offered by Oracle includes the following components.

- External tables. External tables provide a way to combine loading and conversion processes. Their use will allow not to use cumbersome and time-consuming intermediate tables during data loading. They will be described in more detail later in this chapter, in the section “Using external tables to load data”.

- Multitable inserts. The multitable inserts mechanism allows you to insert data not into one table but into several tables at once using different criteria for different tables. It eliminates the need to perform an extra step such as dividing data into separate groups before loading them. It will be discussed in more detail later in this chapter, in the section “Using multiple table inserts”.

- Inserts and updates (upserts). This is a made-up name for a technology that allows you to either insert data into a table or just update rows with a single MERGE SQL statement. The MERGE operator will either insert new data or update rows if such data already exist in the table. It can make the loading process very easy because it eliminates the need to worry about whether the table already contains such data. It will be explained in more detail later in this chapter, under “Using the MERGE Operator”.

- Table functions. Tabular functions generate a set of rows as output. They return an instance of the collection type (i.e. a nested table or one of the VARRAY data types). They are similar to views, but instead of defining the conversion process in a declarative way in SQL, they mean its definition in a procedural way in PL/SQL. Tabular functions are very helpful when performing large and complex conversions, as they allow you to perform them before loading data into the data warehouse. They will be discussed in more detail later in this chapter, in the section “Using table functions to convert data”.

- Transportable tablespaces. These tablespaces provide an efficient and fast way to move data from one database to another. For example, they can be used to easily and quickly migrate data between an OLTP database and the data warehouse. We will talk more about them in new blog articles.

Just for the record! You can also use the Oracle Warehouse Builder (Oracle Data Storage Builder) tool or simply OWB to download data efficiently. This tool is a wizard-controlled tool for loading data into a database via SQL*Loader.

It allows loading data both from Oracle databases and from flat files. It also allows you to extract data from other databases such as Sybase, Informix, and Microsoft SQL Server through the Oracle Transparent Gateways mechanism. It combines the functions for ETL and design in a very user-friendly format.

In the next section, you will learn how you can use the SQL*Loader utility to download data from external files. It will also help you to understand how to use external tables to perform data uploading. After describing the mechanism of external tables, you will learn about the various methods offered in Oracle Database 11g for data conversion.

Using SQL*Loader utility

The SQL*Loader utility, which comes with the Oracle database server, is often used by database administrators to load external data into Oracle databases. It is an extremely powerful tool and is able to perform not only data uploading from text files. Below is a brief list of its other features.

- It allows you to convert data before or right during the upload itself (however, in a limited way).

- It allows downloading data from several types of sources: from disks, tapes, and named channels, as well as using multiple data files in the same download session.

- It allows downloading data over the network.

- It allows you to selectively upload data from the input file based on different conditions.

- It allows loading both the whole table and only a certain part of it, as well as to load data into several tables simultaneously.

- It allows you to perform simultaneous operations on data loading.

- It allows you to automate the process of loading so that it would be carried out at a scheduled time.

- It allows you to load complex object-relational data.

SQL*Loader utility can be used to perform data loading in several modes.

- In conventional data loading mode. In this mode, SQL*Loader reads several lines at a time and saves them in a bound array, and then inserts the entire array into the database at once and fixes the operation.

- In direct-path loading mode. In this mode, no INSERT SQL statement is used to load data into Oracle tables. Instead, column array structures are created from the data to be loaded, which are then used to format Oracle blocks of data, after which they are written directly to the database tables.

- In the external data loading mode. The new proposed Oracle mechanism of external tables is based on the use of SQL*Loader functionality and allows you to access the data contained in external files as if they were part of the database tables. When using the ORACLE_LOADER access driver to create an external table, in fact, the functionality of SQL*Loader is used. Oracle Database 11g still offers a new access driver ORACLE_DATAPUMP, which allows you to write to external tables.

The first two boot modes (or methods) have both their advantages and disadvantages. Due to the fact that the direct boot mode bypasses Oracle’s proposed SQL mechanism, it is much faster than the normal boot mode.

However, in terms of opportunities for data conversion, the normal mode is much superior to the direct mode, because it allows you to apply to the columns of the table during data loading a number of different functions. Therefore, Oracle recommends the use of the normal method of loading for loading small amounts of data, and straight – for loading large amounts of data.

Direct loading mode will be considered in more detail immediately after learning the basic functionality of SQL*Loader and ways to apply the usual method of loading. As for the external table mode, it will be discussed in more detail later in this chapter, in the section “Using external tables to load data”.

The process of loading data using the SQL*Loader utility includes two main steps.

- Selecting a data file that contains the data to be downloaded. Such a file usually has the extension .dat and contains the necessary data. These data can be in several formats.

- Create a control file. The control file specifies SQL*Loader how the data fields should be placed in the Oracle table and whether the data should be converted in any way. Such a file usually has the .ctl extension.

The control file will provide a scheme for displaying the columns of the table on the data fields in the input file. The availability of a separate data file to perform the upload is not a mandatory requirement at all. If desired, the data can be included in the control file itself, after specifying the information, concerning the loading process control, like the list of fields, etc.

These data can be provided either in the form of fields of fixed length or in a free format with the use of a special delimiter symbol, such as a comma (,) or a conveyor (|). Since the control file is so important, let us start with it.

Studying the SQL*Loader control file

The SQL*Loader control file is a simple text file that specifies important details of the loaded job, such as the location of the original data fall, as well as a scheme for displaying data in this source file on the columns in the target table.

It may also specify any conversion operations to be performed during the upload process, as well as the names of the log files to be used for uploading, and the names of the files to be used for intercepting incorrect and rejected data. In general, the SQL*Loader control file provides instructions on the following aspects:

- the source of the data to be uploaded to the database;

- the specification of the columns in the target table;

- the features of the formatting used in the input file;

- displaying the input file fields in the target table columns;

- data conversion rules (applying SQL functions);

- location of log files and error files.

Listing 13.1 gives an example of a typical SQL*Loader control file. The SQL*Loader utility considers the data rows contained in the source files to be recorded, and therefore the control file may still specify the format of the records.

Please note that a separate file for data is also allowed. In this example, however, the data to be loaded follows the control information directly, by using the INFILE * specification in the control file. This specification indicates that the data to be loaded will follow the control information.

At the performance of a single operation on the loading of the data, probably, it is better to act as easy as possible and place the data in the control file itself. The BEGINDATA keyword shows SQL*Loader, where the part of the control file containing the data begins.

LOAD DATA

INFILE *

BADFILE test.bad

DISCARDFILE test.dsc

INSERT

INTO TABLE tablename

FIELDS TERMINATED BY ',' OPTIONALLY ENCLOSED BY'"

(column1 POSITION (1:2) CHAR,

column2 POSITION (3:9) INTEGER EXTERNAL,

column3 POSITION (10:15) INTEGER EXTERNAL,

column4 POSITION (16:16) CHAR

)

BEGINDATA

AY3456789111111Y

/* Other data . . .*/

The part of the control file that describes the data fields is called the field list. In the control file, shown in Listing 13.1, this field list looks like this:

(column1 POSITION (1:2) char,

column2 POSITION (3:9) integer external,

column3 POSITION (10:15) integer external,

column4 POSITION (16:16) char

)

Here you can see that the list of fields lists the names of fields, their position, data type, separators, and any permissible conditions.

In the control file, you can specify many variables, which, roughly speaking, are divided into the following groups:

- constructions related to loading;

- constructions related to data files;

- constructions concerning the display of tables and fields;

- command-line parameters defined in the control file.

The following subsections describe in more detail all of these different types of parameters that can be set in the control file to configure data loading processes.

Tip. If you are not sure which parameters to use for an SQL*Loader session, you can simply enter them at the command line of the sqlldr operating system to see all available options. Running this command will display a list of all possible parameters and default values that are accepted for them in a particular operating system (if any).

Designs related to download

LOAD DATA keywords are at the very beginning of the control file and simply mean that you need to load data from the input file into Oracle tables using SQL*Loader.

The INTO TABLE design indicates which table the data needs to be loaded into. If you need to load data into several tables at once, you need to use one INTO TABLE structure for each table. Keywords INSERT, REPLACE, and APPEND indicate to the database how the data should be loaded.

If you use an INSERT construct, the table must be empty, otherwise, the loading process will generate an error or stop. The REPLACE design tells Oracle to truncate the table and start downloading new data. When performing a boot operation using the REPLACE parameter, it will often appear as if the table hangs first. In fact, at this time Oracle truncates the table before starting the download process. As for the APPEND design, it tells Oracle to add new rows to existing table data.

Constructions concerning data files

There are several designs that can be used to specify the location and other characteristics of a file or data files from which data is to be loaded via SQL*Loader. The following subsections describe some of the most important of these constructions.

Specification of the data file

The name and location of the input file is specified using the INFILE parameter:

INFILE='/a01/app/oracle/oradata/load/consumer.dat'

If you do not want to use the INFILE specification, you can include the data in the control file itself. If the data are included in the control file, instead of using a separate input file, the place of the file location is omitted and simply the symbol * is specified in its place:

INFILE *

If you choose to include data into the control file itself, you must use the BEGINDATA construction before starting the data:

BEGINDATA

Nicholas Alapati,243 New Highway,Irving,TX,75078

. . .

Physical and logical records

Each physical record in the source file is equivalent to a logical one by default, but if desired you can also specify in the control file that one logical record should include several physical records at once. For example, in the next input file, all three physical records shall also be considered three logical records by default:

Nicholas Alapati,243 New Highway,Irving,TX,75078

Shannon Wilson,1234 Elm Street,Fort Worth,TX,98765

Nina Alapati,2629 Skinner Drive,Flower Mound,TX,75028

To convert these three physical records, you can use either the CONCATENATE structure or the CONTINUEIF structure in the control file.

If the input data are in a fixed format, you can specify the number of lines to be read to form each logical record as follows:

CONCATENATE 4

Specifically, this CONCATENATE design determines that one logical record should be obtained by combining four lines of data. If each data line consists of 80 characters, then it turns out that the new logical record to be created will contain 320 characters. For this reason, when using the CONCATENATE construction, the record length construction (RECLEN) should also be defined together with it. In this case, this construction should look like this:

RECLEN 320

As for the CONTINUEIF design, it allows combining physical records into logical ones by specifying one or more characters in a particular place. For example:

CONTINUEIF THIS (1:4) = ‘next’

In this example, the CONTINUEIF design indicates that if four characters next are found at the beginning of a line, SQL*Loader should take all subsequent data as an extension of the previous line (four characters and the word next were chosen arbitrarily: any characters may act as continuation pointers).

In case of using fixed format data, the CONTINUEIF character can be placed in the last column as shown in the following example:

CONTINUEIF LAST = '&'

Here, the CONTINUEIF design determines that if an ampersand (&) character is found at the end of a line, the SQL*Loader utility should take the next line as an extension of the previous one.

Please note! Using both CONTINUEIF and CONCATENATE constructs will slow down SQL*Loader, so it is still better to display physical and logical records in a “one to one” scheme. This is because when combining multiple physical records to form a single logical SQL * Loader requires additional scanning of input data, which takes more time.

Record Format

You can apply one of the following three formats to your recordings.

- The stream format. This format is the most common and involves the use of a special ending symbol to indicate the end of the recording. When scanning an input file, the SQL*Loader utility knows that it has reached the end of a record when it comes across such a completion character. If no end character is specified, the default end character is either the newline character or the newline character (which in Windows must also be preceded by the carriage return character). In a set of three entries, which was given in the previous example, this is the format used.

- Variable format. This format implies an explicit indication of its length at the beginning of each record, as shown in the following example:

INFILE 'example1.dat' "var 2"

06sammyy12johnson,1234

This line contains two entries: the first six characters long (Sammy) and the second twelve characters long (johnson,1234). The var 2 design indicates to SQL*Loader that all data records are of variable size and that each new record is preceded by a 2 character long field.

- Fixed format. This format involves setting a specific fixed size for all records. Below is an example where it is specified that each record is 12 bytes long:

INFILE 'example1.dat' "fix 12".

sammyy,1234,johnso,1234

In this example, at first glance, it seems that the record includes the whole line (sammyy,1234, johnso,1234), but the design of fix 12 indicates that in fact, this line contains two whole records of 12 characters. Therefore, it turns out that if a fixed format is used in the source data file, it is allowed to have several entries on each line.

Descriptions concerning table and field comparison

During the loading session, SQL*Loader takes data fields from data records and converts them into table columns. It is in this process that the designs for mapping tables and fields help. With their help, the control file provides details about the fields, including column names, position, types of data contained in the input records, separators, and conversion parameters.

The column name in the table

Each column in the table is clearly defined with the position and type of data associated with the value of the corresponding field in the input file. It is not necessary to load all columns in the table with the values. If you skip any columns in the control file, the NULL value is automatically set for them.

Position

The SQL*Loader utility needs to somehow find out where in the input file there are different fields. The fields are called individual elements in the data file, and there is no direct match between these fields and the columns in the table where the data is loaded. The process of displaying the fields in the input data file on the columns of the table in the database is called field setting and takes the most time from the CPU during loading. The exact position of the various fields in the data record allows you to set the POSITION design. The position specified in this design can be relative or absolute.

By specifying a relative position, we mean the field position relative to the position of the previous field, as shown in the following example:

POSITION(*) NUMBER EXTERNAL 6

employee_name POSITION(*) CHAR 30

In this example, the POSITION design instructs SQL*Loader to first load the first employee_id field and then proceed to load the employee_name field, which starts at position 7 and is 30 characters long.

By specifying an absolute position is meant simply the position where each field begins and ends:

employee_id POSITION(1:6) INTEGER EXTERNAL

employee_name POSITION(7:36) CHAR

Data Types

The types of data used in the management file concern only the input records and those associated with the columns in the database tables do not match. The four main data types that can be used in the SQL*Loader control file are listed below:

INTEGER(n) is a binary integer where n can be 1, 2, 4 or 8.

SMALLINT

CHAR

INTEGER EXTERNAL

FLOAT EXTERNAL

DECIMAL EXTERNAL

Separators

After specifying the data types, you can specify a delimiter, which should be used to separate fields. It can be defined using either the TERMINATED BY construction or the ENCLOSED BY construction.

The TERMINATED BY construction restricts the field to the specified symbol and indicates the end of the field. Examples are given below:

BY WHITESPACE

BY ","

In the first example, the design of TERMINATED BY indicates that the end of the field should be the first character to meet the space character, and in the second example, that a comma should be used to separate the fields.

The design of ENCLOSED BY ” ” indicates that a pair of double quotes should act as the field separating character. Below is an example of how to use this construction:

FIELDS TERMINATED BY ',' OPTIONALLY ENCLOSED BY '"

Council. In Oracle, it is recommended to avoid using separators and to specify the field positions wherever possible (using the POSITION parameter). Specifying field positions saves the database the need to scan the data file and find the selected delimiters there, thus reducing the time spent on its processing.

Data conversion parameters

If you wish, you can make sure that before loading field data into table columns, SQL-functions are applied to them. Generally, only SQL functions that return single values can be used to convert field values. A field within an SQL string needs to be referenced using the field name_taxis.

The SQL function or functions themselves must be specified after the data type associated with the field and enclosed in double-quotes, as shown in the following examples:

field_name CHAR TERMINATED BY "," "SUBSTR(:field_name, 1, 10)".

employee_name POSITION 32-62 CHAR "UPPER(:ename)"

salary position 75 CHAR "TO_NUMBER(:sal, '$99,999.99')"

commission INTEGER EXTERNAL "":commission * 100"

As it is not difficult to notice, the application of SQL-operations and functions to the values of fields before they are loaded into tables helps to convert data right during their loading.

Command-line parameters specified in the control file

The SQL*Loader utility allows you to specify the number of runtime parameters in the command line when calling its executable file. Parameters, the values of which should remain the same for all jobs, are usually set in a separate parameter file, allowing you to further use the command line just to run SQL*Loader jobs, both interactively and as scheduled batch jobs.

The command line specifies only those parameters that are specific to the execution time, along with the name and location of the control file.

Alternatively, the runtime parameters can also be specified within the control file itself using the OPTIONS design. Yes, the runtime parameters can always be specified when calling SQL*Loader, but if they are frequently repeated, it is still better to specify them in the control file using the OPTIONS design.

Using the OPTIONS construct is especially convenient when the length of information to be specified in the SQL*Loader command line is so large that it exceeds the maximum command-line size accepted in the operating system.

For your information! Setting a parameter on the command line will override the values that were specified for it in the control file.

The following subsections describe some of the most important parameters that can be set in the control file by the OPTIONS structure.

Parameter USERID

The USERID parameter enables you to specify the name and password of the user in the database who has the privileges required for downloading data:

USERID = samalapati/sammyy1

CONTROL parameter

The CONTROL parameter allows you to specify the name of the control file to be used for the SQL*Loader session. This control file may include the specifications of all loading parameters. Of course, it is also possible to load data using manually entered commands, but the use of the control file provides more flexibility and allows you to automate the loading process.

CONTROL = '/test01/app/oracle/oradata/load/finance.ctl'

DATA parameter

The DATA parameter allows you to specify the name of the input data file, which should be used to load data. By default, the name of such a file always ends with the .dat extension. Please note that the data to be loaded need not be inside a separate data file. If desired, they may also be included in the control file, right after the details concerning the loading process itself.

DATA = '/test02/appacle/oradata/load/finance.dat'

BINDSIZE and ROWS parameters

The BINDSIZE and ROWS parameters allow you to specify the size that the binding array should have in normal boot mode. When loading in normal mode, SQL*Loader utility does not insert data into the table row by row. Instead, it inserts an entire set of rows into the table at once; this set of rows is called a bound array, and either the BINDSIZE or ROWS parameter is responsible for its size.

The BINDSIZE parameter specifies the binding array size in bytes. In the author’s system, this size was 256 000 bytes by default.

BINDSIZE = 512000

As for the ROWS parameter, it does not impose any restrictions on the number of bytes in the binding array. Instead, it imposes a limit on the number of rows that can be contained in each binding array; SQL*Loader multiplies this value in the ROWS parameter by the calculated size of each row in the table. In the author’s system, the number of rows in the ROWS parameter was 64 by default.

ROWS = 64000

Just for the record! When setting values for both BINDSIZE and ROWS, the SQL*Loader utility uses the lesser of these two values for the binding array.

The DIRECT parameter

If DIRECT is set to true (DIRECT=true), the SQL*Loader utility uses the direct boot mode rather than the normal one. The default value for this parameter is false (DIRECT=false), meaning that the normal boot mode should be used by default.

The ERRORS parameter

The ERRORS parameter allows you to specify how many errors can occur before the SQL*Loader job must be completed. In most systems, this parameter is set to 50 by default. If you do not want to tolerate any errors, you can set this parameter to 0:

ERRORS = 0

LOAD parameter

With the LOAD parameter, you can specify how many maximum logical records are allowed to be loaded into the table. By default, you can load all records that are contained in the input data file.

LOAD = 10000

LOG parameter

The LOG parameter allows you to specify the name of the log file that the SQL*Loader utility should use during the boot process. The log file, which will be shown later, provides a lot of useful information about the SQL*Loader session.

LOG = '/u01/app/oracle/admin/finance/logs/financeload.log'

BAD parameter

The BAD parameter allows you to specify the name and location of a bad file. In case some records are rejected due to data formatting errors, the SQL*Loader utility will write all these records into the mentioned file. For example, the size of a field may exceed the length specified for it and, as a consequence, be rejected by the SQL*Loader utility.

Note that the records may be rejected not only by the SQL*Loader utility but also by the database itself. For example, when trying to insert lines with duplicate values of primary keys, the database will reject their insertion. Such entries shall also be placed in the wrong entry file. If the name of the invalid record file is not explicitly specified, Oracle shall create such a file automatically and use the default name with the name of the management file as the prefix.

BAD = '/u01/app/oracle/load/financeload.bad'

SILENT parameter

By default, SQL*Loader displays response messages on the screen and thus informs you about the loading process. You can turn off the display of these messages using the SILENT parameter if you wish. Several values can be set for this parameter. For example, you can turn off the display of all types of messages by setting it to ALL:

SILENT = ALL

DISCARD and DISCARDMAX parameters

All records that are rejected at boot time because they do not meet the selection criteria specified in the management file are placed in the discard file. By default, this file is not created. Oracle will create it only if there are rejected records, and even then only if it was explicitly specified in the control file. The DISCARD parameter, for example, is used to specify the name and location of the rejected records file in the control file:

DISCARD = 'test01/appacle/oradata/load/finance.dsc'

By default, SQL*Loader does not impose any restrictions on the number of records; therefore, all logical records can be rejected. With the parameter DISCARDMAX, however, you can take and limit the number of rejected records.

Tip. All records are placed in their original format in both the wrong and rejected records files. This makes it easy, especially when loading large amounts of data, to edit these files properly and use them to reload data that could not be downloaded during the initial upload session.

PARALLEL option

The PARALLEL parameter allows you to specify whether SQL*Loader is allowed to run several parallel sessions when loading in direct mode:

sqlldr USERID=salapati/sammyy1 CONTROL=load1.ctl DIRECT=true PARALLEL=true

Parameter RESUMABLE

With the RESUMABLE parameter, you can enable the Resumable Space Allocation function offered by Oracle (resume operation after the problem of space allocation is eliminated). If this feature is enabled, when a space problem occurs at the time of loading, the task will simply be paused, and the administrator will then be notified and will be able to allocate more space so that the task can continue without problems.

The Resumable Space Allocation feature is described in more detail in Chapter 8. By default, RESUMABLE is set to false, which means that Resumable Space Allocation is disabled. To enable it, simply set it to true (RESUMABLE=true).

The parameter RESUMABLE_NAME

The RESUMABLE_NAME parameter allows you to specify a specific boot job that should be renewable when using the Resumable Space Allocation function. By default, the value to be set for it is formed by combining the user name, session identifier, and instance identifier.

RESUMABLE_NAME = finance1_load

Parameter RESUMABLE_TIMEOUT

The RESUMABLE_TIMEOUT parameter can only be set to true if the RESUMABLE parameter is set to true. It allows you to define the timeout, i.e. the maximum time for which the operation can be postponed in case of a collision with a space-related problem. If the problem cannot be solved within this time, the operation will be interrupted. By default, this timeout is 7,200 seconds.

RESUMABLE_TIMEOUT = 3600

SKIP parameter

The SKIP parameter is very convenient to use in situations when SQL*Loader interrupts job execution due to some errors, but already has time to fix some lines. It allows you to skip a certain number of lines in the input file when executing SQL*Loader job for the second time. An alternative is to truncate the table and restart SQL*Loader job from the very beginning, which is not very convenient if a decent number of rows have already been loaded into the database tables.

SKIP = 235550

In this example, it is assumed that the first time the job was interrupted after the successful loading of 235 549 lines. This information can be obtained either by looking at the log file used during this upload session or by performing a query directly to the table itself.

Generation of data during download

SQL*Loader utility allows generating data for loading columns. This means that you can load data without using any data file. Most often, however, data is only generated for one or more columns when performing a general loading from a data file. Below is a list of the data types that SQL*Loader can generate.

Constant value. Using the CONSTANT design, you can set a column to a constant value. For example, in the following example this construction indicates that all rows to be filled during a given session must have sysadm value loaded_by in the column:

loaded_by CONSTANT "sysadm"

The value of the expression (value). With the EXPRESSION design, you can set the column value of an SQL operation or a PL/SQL function as shown below:

column_name EXPRESSION "SQL string"

Datafile record number. Using the RECNUM construction, it is possible to set the column record number, which led to the loading of this row, as a value:

record_num RECNUM

System date. The sysdate variable can be used to set the date of data downloads for a column as a value:

loaded_date sysdate

Sequence (sequence). With the SEQUENCE function you can generate unique values for column loading. In the following example, this function indicates that the current maximum loadseq sequence value should be used and that this value should be increased by one every time a row is inserted:

loadseq SEQUENCE(max,1)

Call SQL*Loader

There are several ways to call SQL*Plus utility. The standard syntax for calling SQL*Loader looks like this:

SQLLDR keyword=value [,keyword_word=value,...]

Below is an example of how to call SQL*Loader:

$ sqlldr USERID=nicholas/nicholas1 CONTROL=/u01/app/oracle/finance/finance.ctl \

DATA=/u01/app/oracle/oradata/load/finance.dat \

LOG=/u01/aapp/oracle/finance/log/finance.log \

ERRORS=0 DIRECT=true SKIP=235550 RESUMABLE=true RESUMABLE_TIMEOUT=7200

Just for the record! When calling the SQL*Loader utility from the command line, the backslash character (\) at the end of each line means that the command continues on the next line. You can specify command-line parameters by specifying their names as well as their positions.

For example, the parameter responsible for username and password always follows the keyword sqlldr. If the parameter is skipped, Oracle will use the default value for this parameter. If you wish, you can add a comma after each parameter.

It is not difficult to notice that the more parameters you need to use, the more information you have to provide in the command line. This approach has two drawbacks. First, there is confusion when misprints or other errors are made. Secondly, some operating systems may have a limit on the number of characters that can be entered at the command line. Fortunately, the same task can also be started with the following command, which is much less complicated:

$ sqlldr PARFILE=/u01/app/oracle/admin/finance/load/finance.par

PARFILE represents a parameter file, i.e. a file that can contain values for all command parameters. For example, for the load specifications shown in this chapter, this file looks like this:

USERID=nicholas/nicholas1

CONTROL='/u01/app/oracle/admin/finance/finance.ctl'

DATA='/app/oracle/oradata/load/finance.dat'

LOG='/u01/aapp/oracle/admin/finance/log/finance.log'

ERRORS=0

DIRECT=true

SKIP=235550

RESUMABLE=true

RESUMABLE_TIMEOUT=7200

Using the parameter file is a more elegant approach than entering all the parameters in the command line, and also more logical when you need to regularly perform tasks with the same parameters. Any option that is specified on the command line will override the value that was set for that parameter within the parameter file.

If you want to use the command line, but exclude the possibility of someone peeping at the password being entered, you can call SQL*Loader in the following way:

$ sqlldr CONTROL=control.ctl

In this case, SQL*Loader will display a prompt to enter a username and password.

SQL*Loader log file

The log file of the SQL*Loader utility contains a lot of information about its work session. It tells you how many records should have been loaded and how many were actually loaded, as well as what records could not be loaded and why. In addition, it describes the columns that were specified for the fields in the SQL*Loader control file. Listing 13.2 gives an example of a typical SQL*Loader log file.

SQL*Loader: Release 11.1.0.0.0 - Production on Sun Aug 24 14:04:26 2008

Control File: /u01/app/oracle/admin/fnfactsp/load/test.ctl

Data File: /u01/app/oracle/admin/fnfactsp/load/test.ctl

Bad File: /u01/app/oracle/admin/fnfactsp/load/test.badl

Discard File: none specified

(Allow all discards)

Number to load: ALL

Number to skip: 0

Errors allowed: 0

Bind array: 64 rows, max of 65536 bytes

Continuation: none specified

Path used: Conventional

Table TBLSTAGE1, loaded when ACTIVITY_TYPE != 0X48(character 'H')

and ACTIVITY_TYPE != 0X54(character 'T')

Insert option in effect for this table: APPEND

TRAILING NULLCOLS option in effect

Column Name Position Len Term Encl Datatype

----------------------- -------- ----- ---- ---- ---------

COUNCIL_NUMBER FIRST * , CHARACTER

COMPANY NEXT * , CHARACTER

ACTIVITY_TYPE NEXT * , CHARACTER

RECORD_NUMBER NEXT * , CHARACTER

FUND_NUMBER NEXT * , CHARACTER

BASE_ACCOUNT_NMBER NEXT * , CHARACTER

FUNCTIONAL_CODE NEXT * , CHARACTER

DEFERRED_STATUS NEXT * , CHARACTER

CLASS NEXT * , CHARACTER

UPDATE_DATE SYSDATE

UPDATED_BY CONSTANT

Value is 'sysadm'

BATCH_LOADED_BY CONSTANT

Value is 'sysadm'

/* Discarded Records Section: Gives the complete list of discarded

records, including reasons why they were discarded.*/

/* Rejected records section: contains a complete list of rejected records

along with a description of the reasons why they were rejected.

Record 1: Discarded - failed all WHEN clauses.

Record 1527: Discarded - failed all WHEN clauses.

Table TBLSTAGE1:

/* Number of Rows: Gives the number of rows successfully loaded and the number of

The rows are not loaded due to or because they failed the WHEN conditions, if

any. Here, two records failed the WHEN condition*/

/* Section number of rows: shows how many rows were successfully loaded

and how much was not loaded due to errors or failure to meet WHEN conditions,

if any. */

1525 Rows successfully loaded.

0 Rows not loaded due to data.

2 Rows not loaded because all WHEN clauses were failed.

0 Rows not loaded because all fields were null.

/* Memory Section: Gives the bind array size chosen for the data load*/.

/* Memory partition: shows what size array was selected for data download*/.

Space for bind array: 99072 bytes(64 rows)

Read buffer bytes: 1048576

/* Logical Records Section: Gives the total records, number of rejected

and discarded records.*/

/* Boolean records section: shows how many total logical records

was skipped, read, rejected and rejected.

Total logical records skipped: 0

Total logical records read: 1527

Total logical records rejected: 0

Total logical records discarded: 2

/*Date Section: Gives the day and date of the data load.*/

/*Date section: Gives the date and date of the data load.*/

Run started on Sun Mar 06 14:04:26 2009

Run ended on Sun Mar 06 14:04:27 2009

/*Time section: Gives the time taken to complete the data load.*/

/*Time section: Gives the time taken to complete the data load.*/

Elapsed time was: 00:00:01.01

CPU time was: 00:00:00:00.27

When studying the journal file, the main attention should be paid to how many logical records were read and which records were missed, rejected, or rejected. In case you encounter any difficulties while performing the task, the journal file is the first place you should look to find out whether the data records are being loaded or not.

Using exit codes

The log file records a lot of information about the boot process, but Oracle also allows you to capture the exit code after each load. This approach provides an opportunity to check the results of loading when it is executed by a cron job or a shell script. If you use a Windows server to schedule boot jobs, you can use the at command. The following are the key exit codes that can be found on UNIX and Linux operating systems:

- EX_SUCC 0 means that all lines were loaded successfully;

- EX_FAIL 1 indicates that some errors have been detected in the command line or syntax;

- EX_WARN 2 means that some or all lines have been rejected;

- EX_FTL 3 indicates that some errors have occurred in the operating system.

Using the boot method in direct mode

So far, the SQL*Loader utility has been considered in terms of normal boot mode. As we remember, the normal boot mode method involves using INSERT SQL statements to insert data into tables in the size of one binding array at a time.

The method of loading in the direct mode does not involve the use of SQL-operators to place data in tables, instead, it involves formatting the Oracle data blocks and writing them directly to database files. This direct writing process eliminates most of the overheads that occur when executing SQL statements to load tables.

Since the direct loading method does not involve a struggle for database resources, it will work much faster than the normal loading method. For loading large amounts of data, the direct mode of loading is the most suitable and maybe the only effective method for the simple reason that the execution of loading in normal mode will require more time than available.

In addition to the obvious advantage of reducing the loading time, the direct mode still allows you to rebuild the indexes and perform pre-sorting of data. In particular, it has such advantages in comparison with the normal mode of downloading.

- Loading is much faster than in the normal mode of loading because SQL-operators are not used.

- To perform data recording to the database, multi-block asynchronous I / O operations are used, so the recording is fast.

- There is an option to perform the pre-sorting of data using effective sorting subroutines.

- By setting UNRECOVERABLE to Y (UNRECOVERABLE=Y), it is possible to prevent any re-write data from happening during loading.

- By using the temporary storage mechanism, index building can be done more efficiently than by using the normal boot mode.

For your information! Normal boot mode will always generate rerun records, while direct boot mode will only generate such records under certain conditions. In addition, indirect mode, insertion triggers will not be triggered, which in normal mode are always triggered during the boot process. Finally, in contrast to the normal mode, the direct mode will exclude the possibility of users making any changes to the table to be downloaded with data.

Despite all the above, the method of performing the download in direct mode also has some serious limitations. In particular, it cannot be used under the following conditions:

- when using clustered tables;

- when loading data into parent and child tables simultaneously;

- when loading data into VARRAY or BFILE columns;

- when loading among heterogeneous platforms using Oracle Net;

- if you want to use SQL-function during loading.

Just for the record! You cannot use any SQL-functions in the direct boot mode. If you need to load large amounts of data and also convert them during the loading process, it can lead to problems.

Normal boot mode will allow you to use SQL functions to convert data, but it is very slow compared to direct mode. Therefore, to perform the loading of large amounts of data, it may be preferable to use newer technologies for loading and converting data, such as external tables or table functions.

Parameters that can be applied when using the download method in direct mode

Several parameters in SQL*Loader are specifically designed to be used with the direct mode boot method or more suitable for this method than the normal mode boot method. These parameters are described below.

- DIRECT. If you want to use the direct mode boot method, you must set DIRECT to true (DIRECT=true).

- DATA_CACHE. The parameter DATA_CACHE is convenient to use in case of repeated loading of the same data or values of date and time (TIMESTAMP) during loading in the direct mode. The SQL*Loader utility has to convert date and time data every time it encounters them. Therefore, if there are duplicate date and time values in the downloaded data, setting DATA_CACHE will reduce the number of unnecessary operations to convert these values and thus reduce the processing time. By default, the DATA_CACHE parameter allows saving 1000 values in the cache. If there are no duplicate date and time values in the data, or if there are very few, this parameter can be disabled at all by setting it to 0 (DATA_CACHE=0).

- ROWS. The ROWS parameter is important because it allows you to specify how many rows the SQL*Loader utility should read from the input data file before saving inserts to tables. It is used to define the upper limit of the amount of data lost in case of an instance failure during a long SQL*Loader job. After reading the number of rows specified in this parameter, SQL*Loader will stop loading data until the contents of all data buffers are successfully written to the data files. This process is called data saving. For example, if SQL*Loader can load about 10,000 lines per minute, setting the ROWS parameter to 150,000 (ROWS=150000) will cause data to be saved every 15 minutes.

- UNRECOVERABLE. The UNRECOVERABLE parameter minimizes the use of the rerun log during data loading in the direct mode (it is defined in the control file).

- SKIP_INDEX_MAINTENANCE. The SKIP_INDEX_MAINTENANCE parameter, when enabled (SKIP_INDEX_MAINTENANCE=true), tells SQL*Loader not to worry about index maintenance during loading. It is set to false by default.

- SKIP_UNUSABLE_INDEXES. Setting it to true for SKIP_UNUSABLE_INDEXES will ensure that SQL*Loader boots even tables whose indexes are in an unusable state. SQL*Loader will be serviced, however, these indexes will not be used. The default value for this parameter depends on which value is selected for the SKIP_UNUSABLE_INDEXES initialization parameter, which is set to true by default.

- SORTED_INDEXES. The SORTED_INDEXES parameter notifies SQL*Loader that data has been sorted at the level of certain indices, which helps to speed up the loading process.

- COLUMNARRAYROWS. This parameter allows you to specify how many lines should be loaded before building the thread buffer. For example, if you set it to 100 000 (COLUMNARRAYROWS=100000), 100 000 lines will be loaded first. Therefore, it turns out that the size of the array of columns during loading in the direct mode will depend on the value of this parameter. The default value of this parameter was 5000 rows for the author on a UNIX server.

- STREAMSIZE. The STREAMSIZE parameter allows setting the flow buffer size. For the author on a UNIX server, for example, this size was 256 000 lines by default; if you want to increase it, you could set the STREAMSIZE parameter, for example, STREAMSIZE=51200.

- MULTITHREADING. When the MULTITHREADING parameter is enabled, operations to convert column arrays to thread buffers and then load these thread buffers are executed in parallel. On machines with several CPUs this parameter is enabled by default (set to true). If you want, you can turn it off by setting it to false (MULTITHREADING =false).

Control of restrictions and triggers when using the boot method in direct mode

The method of loading in the direct mode implies inserting data directly into data files by formatting data blocks. Since INSERT operators are not used, there is no systematic application of table restrictions and triggers in the direct loading mode. Instead, all triggers are disabled, as well as some integrity constraints.

The SQL*Loader utility automatically disables all external keys and check integrity restrictions, but it still supports non-zero, unique, and associated with primary keys. When a job is finished, SQL*Loader automatically turns on all disabled constraints again if the REENABLE design was specified. Otherwise, you will need to enable them manually. As for triggers, they are always automatically re-enabled after the boot process has finished.

Tips for optimal use of SQL*Loader

To use SQL*Loader in an optimal way, especially when loading large amounts of data and/or having multiple indexes and restrictions associated with tables in a database, it is recommended to do the following.

- Try to apply the boot method in direct mode as often as possible. It works much faster than the boot method in normal mode.

- Use it wherever possible (with direct boot mode), the UNRECOVERABLE=true option. This will save a decent amount of time because new downloadable data will not need to be fixed in a rerun log file. The ability to perform media recovery still remains valid for all other database users, plus a new SQL*Loader session can always be started if a problem occurs.

- Minimize the use of the NULLIF and DEFAULTIF parameters. These constructs must always be tested on every line for which they are applied.

- Limit the number of data type and character set conversion operations because they slow down the processing.

- Wherever possible, use positions rather than separators for the fields. The SQL*Loader utility is much faster to move from field to field when their positions are provided.

- Display physical and logical records in a one-to-one manner.

- Disable the restrictions before starting the boot process because they will slow it down. Of course, when you turn on the restrictions again sometimes errors may appear, but a much faster execution of the data loading is worth it, especially in the case of large tables.

- Specify the SORTED_INDEXES design in case you use the direct loading method to optimize the speed of loading.

- Delete indexes associated with tables before starting the loading process in case of large data volumes being loaded. If it is impossible to remove indexes, you can make them unusable and use SKIP_UNUSABLE_INDEXES construction during loading, and SKIP_INDEX_MAINTENANCE construction during loading.

Some useful tricks for loading data using SQL*Loader

Using SQL*Loader is an effective approach, but not without its share of tricks. This section describes how to perform some special types of operations while loading data.

Using WHEN structure during data upload operations

The WHEN construct can be used during data upload operations to limit uploaded data to only those strings that meet certain conditions. For example, it can be used to select from a data file only those records that contain a field that meets specific criteria. Below is an example demonstrating the application of the WHEN constructs in the SQL*Loader control file:

LOAD DATA

INFILE *

INTO TABLE stagetbl

APPEND

WHEN (activity_type <>'H') and (activity_type <>'T')

FIELDS TERMINATED BY ','

TRAILING NULLCOLS

/* Here are the columns of the table... */

BEGINDATA

/* Here comes the data...*/

Here, the condition in the WHEN construct specifies that all entries in which the field corresponding to the activity_type column in the stagetbl table do not contain either H or T must be rejected.

Loading the username in the table

You can use a pseudo-variable user to insert a username into a table during the boot process. Below is an example to illustrate how to use this variable. Note that the stagetb1 target table must necessarily contain a column named loaded_by in order for the SQL*Loader utility to be able to insert the username into it.

LOAD DATA

INFILE *

INTO TABLE stagetbl

INSERT

(loaded_by "USER")

/* Here are the columns of the table, and then the data itself...

Loading large data fields into a table

When trying to load into a table any field larger than 255 bytes, even if the VARCHAR(2000) or CLOB type column is assigned, the SQL*Loader utility will not be able to load data, and therefore it will generate an error message Field in data file exceeds the maximum length.

To load a large field, it is necessary to specify in the control file the size of the corresponding column in the table when displaying the columns of the table on the data fields, as shown in the following example (where the corresponding column has the name text):

LOAD DATA

INFILE '/u01/app/oracle/oradata/load/testload.txt'

INSERT INTO TABLE test123

FIELDS TERMINATED BY ','

(text CHAR(2000))

Loading the sequence number in the table

Suppose there is a sequence named test_seq and it is required to increase its number when loading each new data record into the table. This behavior can be ensured in the following way:

LOAD DATA

INFILE '/u01/app/oracle/oradata/load/testload.txt'

INSERT INTO TABLE test123

(test_seq.nextval, . .)

Loading data from a table into an ASCII file

Sometimes it is necessary to extract data from the database table into flat files, for example, to use them to upload data to Oracle tables located elsewhere. If you have a lot of such tables, you can write complex scripts, but if you are talking about just a few tables, the following simple method of data extraction using SQL*Plus commands is also quite suitable:

SET TERMOUT OFF

SET PAGESIZE 0

SET ECHO OFF

SET FEED OFF

SET HEAD OFF

SET LINESIZE 100

COLUMN customer_id FORMAT 999,999

COLUMN first_name FORMAT a15

COLUMN last_name FORMAT a25

SPOOL test.txt

SELECT customer_id,first_name,last_name FROM customer;

SPOOL OFF

You can also use UTL_FILE package to upload data to text files.

Deletion of indexes before loading large data arrays

There are two main reasons why you should seriously consider deleting the indexes associated with a large table before loading data in direct mode using the NOLOGGING option. First, loading together with the indexes supplied with the table data may take more time. Secondly, if the indexes are left active, changes in their structure during loading will generate redo records.

Tip. When choosing the option to load data using the NOLOGGING option, a decent amount of redo records will be generated to indicate the changes made to the indexes. In addition, some more redo data will be generated to support the data dictionary, even during the data loading operation itself with option NOLOGGING. Therefore, the best strategy in this case is to delete the indexes and recreate them after creating the tables.

When loading in the direct mode, somewhere on the halfway point, an instance may crash, the space required by SQL*Loader utility to perform index update may run out, or duplicate index key values may occur. All such situations are referred to as the condition of bringing the indexes to an unusable state because after an instance is restored, the indexes become unusable. To avoid these situations, it may also be better to create indexes after the boot process is finished.

Executing data loading in several tables

You can use the same SQL*Loader utility to load data into several tables. Here is an example of how to load data into two tables at once:

LOAD DATA

INFILE *

INSERT

INTO TABLE dept

WHEN recid = 1

(recid FILLER POSITION(1:1) INTEGER EXTERNAL,

POSITION(3:4) INTEGER EXTERNAL,

dname POSITION(8:21) CHAR)

INTO TABLE emp

WHEN recid <> 1

(recid FILLER POSITION(1:1) INTEGER EXTERNAL,

POSITION(3:6) INTEGER EXTERNAL,

ename POSITION(8:17) CHAR,

POSITION(19:20) INTEGER EXTERNAL)

In this example, data from the same data file is loaded simultaneously into two tables – dept and emp – based on whether the record field contains the value of 1 or not.

SQL*Loader error code interception

Below is a simple example of how you can intercept SQL*Loader error codes:

$ sqlldr PARFILE=test.par

retcode=$?

if [[retcode !=2 ]]].

then .

mv ${ImpDir}/${Fil} ${InvalidLoadDir}/.${Dstamp}.${Fil}

writeLog $func "Load Error" "load error:${retcode} on file ${Fil}".

else

sqlplus / ___EOF

/* Here you can place any SQL-operators for data processing,

that were uploaded successfully */

___EOF

Downloading XML data into Oracle XML database

The SQL*Loader utility supports using the XML data type for columns. If there is such a column, it can therefore be used to load XML data into the table. SQL*Loader takes XML columns as CLOB (Character Large Object) columns.

In addition, Oracle allows you to load XML data both from the primary data file and from an external LOB file (A large Object is a large object), and use both fixed-length and separator fields, as well as read all the contents of the file into a single LOB field.

MORE NEWS

PreambleNoSql is not a replacement for SQL databases but is a valid alternative for many situations where standard SQL is not the best approach for...

PreambleMongoDB Conditional operators specify a condition to which the value of the document field shall correspond.Comparison Query Operators $eq...

5 Database management trends impacting database administrationIn the realm of database management systems, moreover half (52%) of your competitors feel...

The data type is defined as the type of data that any column or variable can store in MS SQL Server. What is the data type? When you create any table or...

PreambleMS SQL Server is a client-server architecture. MS SQL Server process starts with the client application sending a query.SQL Server accepts,...

First the basics: what is the master/slave?One database server (“master”) responds and can do anything. A lot of other database servers store copies of all...

PreambleAtom Hopper (based on Apache Abdera) for those who may not know is an open-source project sponsored by Rackspace. Today we will figure out how to...

PreambleMongoDB recently introduced its new aggregation structure. This structure provides a simpler solution for calculating aggregated values rather...

FlexibilityOne of the most advertised features of MongoDB is its flexibility. Flexibility, however, is a double-edged sword. More flexibility means more...

PreambleSQLShell is a cross-platform command-line tool for SQL, similar to psql for PostgreSQL or MySQL command-line tool for MySQL.Why use it?If you...

PreambleWriting an application on top of the framework on top of the driver on top of the database is a bit like a game on the phone: you say “insert...

PreambleOracle Coherence is a distributed cache that is functionally comparable with Memcached. In addition to the basic function of the API cache, it...

PreambleIBM pureXML, a proprietary XML database built on a relational mechanism (designed for puns) that offers both relational ( SQL / XML ) and...

What is PostgreSQL array? In PostgreSQL we can define a column as an array of valid data types. The data type can be built-in, custom or enumerated....

PreambleIf you are a Linux sysadmin or developer, there comes a time when you need to manage an Oracle database that can work in your environment.In this...

PreambleStarting with Microsoft SQL Server 2008, by default, the group of local administrators is no longer added to SQL Server administrators during the...