
Solutions for the tempdb database in SQL Server

Tempdb is a critical system database in Microsoft SQL Server. What makes it unique is that it is a shared resource: every user of a SQL Server instance uses it to store temporary user objects such as temporary tables, table variables, temporary indexes, cursors and the results of table-valued functions.

SQL Server also uses tempdb to host internal objects: work tables, hash and sort work files, temporary storage for large objects, intermediate results of merge and hash operations, and other system needs. Tempdb usage can range from extremely low to constantly high. It depends on many factors: the size of the databases, the quality and efficiency of the application and database code, the table structure, the use of temporary tables or table variables, the use of cursors and table-valued functions, and the workload.

In any case, those responsible for building and administering a SQL Server environment should make tempdb as efficient as possible, taking into account development practices, scaling factors, budget and intended use. The key requirement is storage with acceptable performance characteristics.

This article covers recommendations for configuring tempdb, including the number and size of its files and the choice of storage, followed by an example from practice.

In many cases, the database administrators responsible for keeping SQL Server performance at an optimal level are not the specialists who built the servers. In a favorable situation, they will have enough influence to recommend levels of CPU, RAM and storage, and their opinion may even be heard when hardware is selected. However, as more and more workloads move to cloud platforms such as Microsoft Azure and Amazon AWS, most key infrastructure decisions are moving out of the database administrator's hands.

This article is a reminder that infrastructure considerations cannot be ignored when moving to the cloud. Companies still face critical infrastructure issues even in a cloud environment, where the options are more limited.

Tempdb configuration recommendations

Clearly, it is extremely important to have tempdb properly configured from the moment SQL Server installation is complete. Tempdb is used heavily in sort, hash and merge operations, as well as by any process that creates temporary objects, so it can become a bottleneck for a significant number of SQL Server applications. You need the right number of files, of the right size, on the fastest disk available. All of these factors are easy to configure or correct, especially in cloud deployments. Also remember that tempdb should always be placed on its own dedicated drive.
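The dedicated-drive rule is easy to check from file metadata. The sketch below assumes you have already pulled (database name, physical path) pairs from `sys.master_files`, for example with `SELECT DB_NAME(database_id), physical_name FROM sys.master_files;`. The function name and the sample paths are hypothetical, and the check only compares drive letters (or UNC shares), so it cannot detect two drive letters that map to the same underlying volume.

```python
import ntpath
from collections import defaultdict


def volumes_shared_with_tempdb(files):
    """Return the drives that hold tempdb files alongside other databases' files.

    `files` is an iterable of (database_name, physical_name) pairs, e.g. rows
    returned by: SELECT DB_NAME(database_id), physical_name FROM sys.master_files;
    """
    databases_by_drive = defaultdict(set)
    for database_name, physical_name in files:
        # ntpath parses Windows paths on any OS: r"E:\TempDB\x.mdf" -> "E:"
        drive, _ = ntpath.splitdrive(physical_name)
        databases_by_drive[drive.upper()].add(database_name.lower())
    return sorted(
        drive
        for drive, databases in databases_by_drive.items()
        if "tempdb" in databases and len(databases) > 1
    )


if __name__ == "__main__":
    # Hypothetical layout: tempdb shares drive D: with a user database.
    sample = [
        ("master", r"C:\SQLData\master.mdf"),
        ("SalesDB", r"D:\SQLData\SalesDB.mdf"),
        ("SalesDB", r"D:\SQLData\SalesDB_log.ldf"),
        ("tempdb", r"D:\SQLData\tempdev.mdf"),
        ("tempdb", r"D:\SQLData\templog.ldf"),
    ]
    print(volumes_shared_with_tempdb(sample))  # ['D:']
```

An empty list means every drive that holds tempdb files holds nothing else.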

Recommendations on the number of tempdb files in SQL Server

The number of data files to create for tempdb depends on the number of logical processor cores. For SQL Server instances with fewer than eight logical cores, use one data file per logical core (a 1:1 ratio). With eight or more logical cores, start with eight data files. If allocation contention then appears in tempdb (visible as growing PAGELATCH_UP waits on tempdb resources), add data files in groups of four until the contention stops.
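The file-count rule above, and a way to tell whether a PAGELATCH wait actually points at a tempdb allocation page, can be sketched as follows. This is a minimal illustration with hypothetical function names. It assumes the wait resource is read from the `resource_description` column of `sys.dm_os_waiting_tasks` and that its first token has the form `db_id:file_id:page_id`; the page intervals (PFS every 8,088 pages, GAM and SGAM every 511,232 pages) are the commonly used values for identifying allocation pages.

```python
def initial_tempdb_data_files(logical_cores: int) -> int:
    """Starting file count: one per logical core below eight, otherwise eight."""
    if logical_cores < 1:
        raise ValueError("logical_cores must be at least 1")
    return min(logical_cores, 8)


def next_tempdb_data_files(current_files: int, contention_persists: bool) -> int:
    """Add data files in groups of four only while allocation contention persists."""
    return current_files + 4 if contention_persists else current_files


def allocation_page_type(resource_description: str):
    """Classify a PAGELATCH wait resource ('db_id:file_id:page_id') in tempdb.

    Returns 'PFS', 'GAM' or 'SGAM' for allocation pages, otherwise None.
    """
    # Assumption: the first whitespace-separated token is 'db_id:file_id:page_id'.
    first_token = resource_description.split()[0]
    db_id, _file_id, page_id = (int(part) for part in first_token.split(":"))
    if db_id != 2 or page_id < 1:  # tempdb is database_id 2; page 0 is the file header
        return None
    if page_id == 1 or page_id % 8088 == 0:  # PFS pages repeat every 8,088 pages
        return "PFS"
    if (page_id - 2) % 511232 == 0:  # GAM pages repeat every 511,232 pages
        return "GAM"
    if (page_id - 3) % 511232 == 0:  # an SGAM page follows each GAM page
        return "SGAM"
    return None


if __name__ == "__main__":
    print(initial_tempdb_data_files(4), initial_tempdb_data_files(32))  # 4 8
    print(next_tempdb_data_files(8, contention_persists=True))  # 12
    print(allocation_page_type("2:1:1"), allocation_page_type("2:3:3"))  # PFS SGAM
```

If most PAGELATCH_UP waits classify as PFS, GAM or SGAM pages, adding another group of four files is the step the rule suggests; waits on other pages point to a different cause.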

Recommendations on tempdb file sizes in SQL Server

The size of tempdb depends on many factors, such as the size of the user databases, the efficiency of the application code and the design of the relational model. Other factors also need to be taken into account, such as index rebuilds that sort in tempdb, use of the version store to reduce contention, and the use of certain types of functions.

First of all, configure every data file with the same autogrowth increment (ideally with the same initial size as well, so that SQL Server's proportional-fill algorithm spreads allocations evenly across them). During development and testing you can collect metrics that show how tempdb is expected to be used, and apply them when the database goes into production. Sizing tempdb files is a little harder at first, but cloud provisioning makes it easier, since storage can usually be added online: if more space is needed, it can be allocated without downtime. My main goal when sizing tempdb files (and the files of most other databases) is to grow the data and log files to their maximum predicted size in advance. I don't rely on autogrowth, but I keep it available as a safety net by leaving extra space free and by configuring warnings that give me plenty of time to allocate more space on demand.
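A minimal sketch of the pre-sizing approach: generate `ALTER DATABASE tempdb` statements that give every data file the same size and the same growth increment, plus a simple warning check. It assumes only the primary data file (default logical name `tempdev`) exists, that the target size is larger than its current size (`MODIFY FILE` cannot shrink it), and that the extra files follow a hypothetical `temp2`, `temp3`, ... naming scheme; the 80% warning threshold is likewise an illustrative assumption.

```python
def tempdb_sizing_script(file_count: int, size_mb: int, growth_mb: int, folder: str):
    """Build T-SQL that gives every tempdb data file the same size and growth.

    Assumes only the primary data file (logical name 'tempdev') exists and
    that size_mb is larger than its current size.
    """
    statements = [
        "ALTER DATABASE tempdb MODIFY FILE "
        f"(NAME = tempdev, SIZE = {size_mb}MB, FILEGROWTH = {growth_mb}MB);"
    ]
    for n in range(2, file_count + 1):
        name = f"temp{n}"  # hypothetical naming scheme for the extra files
        statements.append(
            f"ALTER DATABASE tempdb ADD FILE (NAME = {name}, "
            f"FILENAME = '{folder}\\{name}.ndf', "
            f"SIZE = {size_mb}MB, FILEGROWTH = {growth_mb}MB);"
        )
    return statements


def space_warning(used_mb: float, allocated_mb: float, threshold: float = 0.8) -> bool:
    """True once usage crosses the warning threshold, well before autogrowth kicks in."""
    return used_mb >= threshold * allocated_mb


if __name__ == "__main__":
    for stmt in tempdb_sizing_script(4, 8192, 1024, r"T:\TempDB"):
        print(stmt)
    print(space_warning(used_mb=7000, allocated_mb=8192))  # True
```

Review the generated statements before running them; the point of the sketch is that every file receives identical `SIZE` and `FILEGROWTH` values.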

Selecting storage for tempdb

It remains to choose the storage class for tempdb. This decision is the easiest one to overlook, especially for SQL Server instances in the cloud. Most cloud providers offer storage at several performance levels; Microsoft Azure and Amazon AWS, for example, offer many SSD options alongside hard disk drives. For most cloud instances there are two ways to reach an IOPS target. Depending on the vendor, you can provision a volume type whose IOPS scales with its size in gigabytes, or a volume type that guarantees a specified minimum IOPS level. If the selected level proves inadequate for a production application, IOPS can be raised, which is another advantage of running SQL Server in the cloud. Most storage changes made to adjust IOPS for SQL Server are online operations: raising or lowering the provisioned IOPS usually does not require a service restart or a server reboot.
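The "IOPS based on size" option can be made concrete with AWS gp2, the volume type that appears in the case study. The sketch assumes the gp2 baseline as AWS has documented it (3 IOPS per GiB, a floor of 100 IOPS and a ceiling of 16,000 IOPS); it ignores gp2's burst credits, and the figures should be checked against current AWS documentation before use. Function names are hypothetical.

```python
import math

# Assumed gp2 figures (verify against current AWS documentation).
GP2_IOPS_PER_GIB = 3
GP2_MIN_IOPS = 100
GP2_MAX_IOPS = 16_000


def gp2_baseline_iops(size_gib: int) -> int:
    """Baseline IOPS of a gp2 volume: 3 IOPS per GiB, clamped to [100, 16,000]."""
    return max(GP2_MIN_IOPS, min(GP2_MAX_IOPS, GP2_IOPS_PER_GIB * size_gib))


def gp2_size_for_iops(target_iops: int):
    """Smallest gp2 size (GiB) whose baseline meets target_iops, or None if gp2 cannot."""
    if target_iops > GP2_MAX_IOPS:
        return None  # a provisioned-IOPS class such as io1 is needed instead
    if target_iops <= GP2_MIN_IOPS:
        return 1  # every gp2 volume gets at least the 100 IOPS floor
    return math.ceil(target_iops / GP2_IOPS_PER_GIB)


if __name__ == "__main__":
    print(gp2_baseline_iops(200))   # 600
    print(gp2_size_for_iops(6000))  # 2000
```

With a size-based class, reaching a higher IOPS target means buying capacity you may not need; a provisioned-IOPS class decouples the two, which is the trade-off the paragraph describes.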

Case Study

Recently, one of my clients faced a number of performance issues in a production environment hosted on AWS. There were many technical shortcomings, and fixing them all would have taken months of work. While troubleshooting, however, I noticed one metric that promised a quick win: tempdb latency. Latency shows how long I/O requests to the disk subsystem take to complete. In essence, it is the time in milliseconds (ms) between issuing a request for a data item on disk and receiving that data item. Latency depends on the throughput the storage class can deliver, which is determined by its model or settings, and on the number of requests queued for access to the disk.
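The link between latency, throughput and queue length can be illustrated with Little's law (L = λW): average latency equals the average number of outstanding requests divided by the completion rate. This is an idealized steady-state model, not how SQL Server measures latency, and the 3,000 IOPS cap in the example is an arbitrary illustrative value.

```python
def average_latency_ms(outstanding_ios: float, completed_iops: float) -> float:
    """Little's law, L = lambda * W, solved for W and expressed in milliseconds.

    outstanding_ios: average number of I/O requests queued or in flight (L)
    completed_iops:  I/O operations completed per second (lambda)
    """
    if completed_iops <= 0:
        raise ValueError("completed_iops must be positive")
    return outstanding_ios / completed_iops * 1000.0


if __name__ == "__main__":
    # A volume capped at 3,000 IOPS: deeper queues translate directly into latency.
    for queue_depth in (1, 8, 32):
        print(queue_depth, round(average_latency_ms(queue_depth, 3000), 2))
```

Once a volume is running at its IOPS ceiling, every additional queued request adds latency, which is why raising the ceiling can cut latency without any change to the workload.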

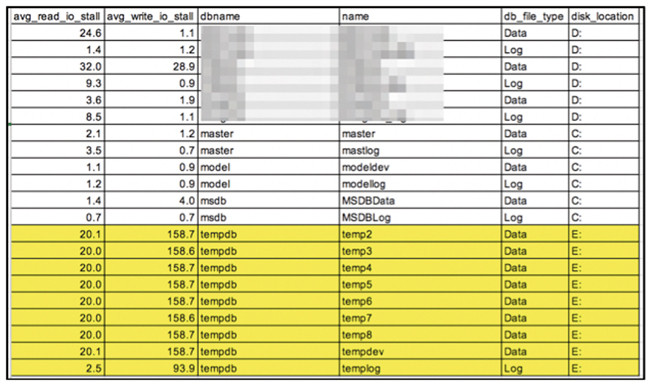

Screen 1 shows the disk latency data collected during the initial assessment of the SQL Server instance.

Screen 1. Initial SQL Server disk latency

Based solely on the latency figures returned by a single query against the dynamic management function sys.dm_io_virtual_file_stats, I drew several conclusions:

- The data and log files of the user databases share one volume, so data-file and log-file reads and writes compete with each other.

- The system databases are located on the same disk as the operating system. I usually prefer a separate disk for the system databases (other than tempdb), but this is not a top priority.

- The latency of the disk dedicated to tempdb was far higher than that of the other disks.
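A sketch of how per-file latency can be derived from `sys.dm_io_virtual_file_stats`: divide the cumulative stall time by the number of operations. The query uses the DMF's documented columns; the Python side assumes its rows have already been fetched as tuples in the query's column order, and the sample rows are hypothetical, not the client's data. The 5 ms threshold is the target the author uses for an optimally running server.

```python
# Query whose result rows the sketch below expects (columns are cumulative since startup).
VFS_QUERY = """
SELECT DB_NAME(vfs.database_id) AS database_name,
       mf.physical_name,
       vfs.num_of_reads, vfs.io_stall_read_ms,
       vfs.num_of_writes, vfs.io_stall_write_ms
FROM sys.dm_io_virtual_file_stats(NULL, NULL) AS vfs
JOIN sys.master_files AS mf
  ON mf.database_id = vfs.database_id AND mf.file_id = vfs.file_id;
"""

LATENCY_TARGET_MS = 5.0  # the author's target for an optimally running server


def average_stall_ms(stall_ms: int, operations: int) -> float:
    """Average latency per operation; 0.0 when the file saw no operations."""
    return stall_ms / operations if operations else 0.0


def files_over_target(rows, target_ms: float = LATENCY_TARGET_MS):
    """Yield (physical_name, read_ms, write_ms) for files exceeding the target."""
    for _db, physical_name, reads, read_stall, writes, write_stall in rows:
        read_ms = average_stall_ms(read_stall, reads)
        write_ms = average_stall_ms(write_stall, writes)
        if read_ms > target_ms or write_ms > target_ms:
            yield physical_name, round(read_ms, 1), round(write_ms, 1)


if __name__ == "__main__":
    # Hypothetical rows, not the client's data.
    rows = [
        ("tempdb", r"E:\TempDB\tempdev.mdf", 1000, 20000, 500, 1500),
        ("SalesDB", r"F:\Data\SalesDB.mdf", 400, 1200, 50, 100),
    ]
    for row in files_over_target(rows):
        print(row)
```

Because the counters accumulate from instance startup, a single query gives lifetime averages; to measure a specific window, take two snapshots and divide the differences.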

The read latency of the main user data file (32.0 ms on drive D, compared with 20 ms for each tempdb data file on drive E) raised questions until I considered the number of read and write operations. Over the same period, the main user database performed 11 times fewer reads than tempdb and half as many writes. The amount of RAM also matters: the buffer pool was large enough to hold the user database ten times over.
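The reasoning in this paragraph comes down to multiplying frequency by latency: total wait time is the number of operations times the average latency. The sketch below applies that to the read figures from the text, normalizing the user database's read count to 1; write latencies are not given, so only reads are compared. The function name is hypothetical.

```python
def total_stall_ratio(ops_a: float, latency_a_ms: float,
                      ops_b: float, latency_b_ms: float) -> float:
    """How many times more total wait time workload A accumulates than workload B."""
    return (ops_a * latency_a_ms) / (ops_b * latency_b_ms)


if __name__ == "__main__":
    # Figures from the text: tempdb issued 11x the reads of the user database
    # at about 20 ms each; the user database's reads averaged 32 ms.
    print(total_stall_ratio(11, 20.0, 1, 32.0))  # 6.875
```

Despite its lower per-read latency, tempdb accumulated roughly seven times as much total read wait as the user database.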

Most user database pages are read into the buffer pool once, and repeated I/O involves only “dirty” pages. Tempdb's access pattern is completely different and far less predictable because of the way tempdb is used. The user database latency values miss the target I set for an optimally running server (no more than 5 ms), but improving them is far less critical than fixing tempdb's performance.

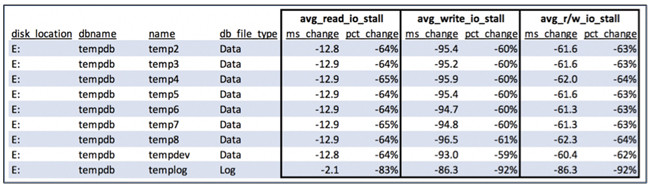

On closer inspection, I found that the client had provisioned all of its disks as general-purpose AWS gp2 volumes and had ignored io1 (Provisioned IOPS) storage, which is intended for business-critical applications, particularly databases. Converting a disk to io1 took five minutes and, without any other changes to the client environment, produced the latency changes shown in Screen 2.

Screen 2. Latency improvement from changing only the drive type

By changing only the drive class and provisioning higher IOPS and throughput, we improved read and write latency on the tempdb volume by more than 60%. This in turn reduced the overall blocking caused by requests with transactional dependencies on tempdb.

Are you ready to take the time to analyze your tempdb performance? You may find simple, quick fixes in your environment too.
