REQUEST COMPLIMENTARY SQLS*PLUS LICENCE

SQL Server Architecture

Preamble

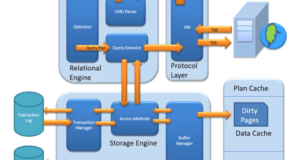

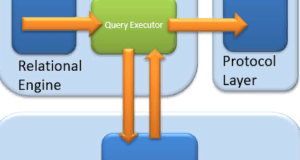

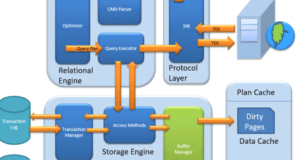

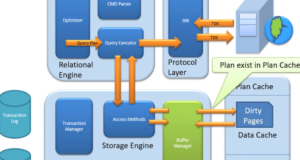

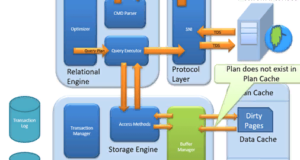

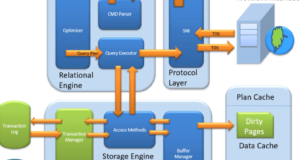

MS SQL Server is a client-server architecture. MS SQL Server process starts with the client application sending a query.SQL Server accepts, processes, and responds to the query with processed data. Let us discuss in detail the entire architecture shown below.

As shown in the diagram below, there are three main components in SQL Server architecture:

- Protocol level

- Relational Motor

- Storage motor

Protocol level

MS SQL SERVER PROTOCOL LAYER supports 3 types of client-server architecture. We will start with “Three types in client-server architecture”. Which supports MS SQL Server.

Shared memory

Let’s review the conversation scenario in the early morning..

Mom and Tom – Here Tom and his mother were in the same logical place, that is in their house. Tom could ask for coffee, and his mother could serve it hot.

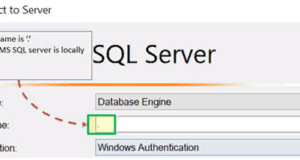

MS SQL Server – Here the MS SQL server provides a common memory protocol. Here CLIENT and MS SQL server work on the same machine. Both can communicate via the shared memory protocol.

Analogy: allows comparing the entities in the two above scenarios. We can easily match Tom with the client, Mom with the SQL server, Home to Machine, and verbal communication with the shared memory protocol.

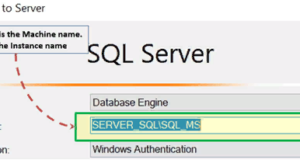

From the configuration and installation desk:

To connect to a local database – in SQL Management Studio, the “Server name” parameter can be

"Local"

«127.0.0.1»

"Machine \ Instance"

TCP / IP

And now let’s see in the evening, Tom is in a festive mood. He wants a coffee, ordered at a famous coffee shop. The coffee shop is 10 km away from his house.

Here Tom and Starbuck are in different physical places. Tom is at home and Starbucks is on a busy trading floor. They communicate through a cellular network. Similarly, MS SQL SERVER provides the ability to communicate over the TCP / IP protocol, where CLIENT and MS SQL Server are remote from each other and installed on a separate machine.

Analogy: allows comparing the entities in the above two scenarios. We can easily match Tom with the client, Starbuck with the SQL Server, Home / Market place with a remote location, and finally with the cellular network over TCP / IP.

Notes from the Configuration / Installation panel:

- In SQL Management Studio – to connect via TCP \ IP the “Server name” parameter must be “Computer \ Server instance”.

- SQL Server uses port 1433 in TCP / IP.

Named pipes

Now, finally, at night, Tom wanted to drink a light green tea, which was very well prepared by her neighbor Sierra.

Here Tom and his neighbor Sierra are in the same physical place, being each other’s neighbor. They communicate through the Intra network. Similarly, MS SQL SERVER provides the ability to communicate via the Named Pipe protocol. Here, the CUSTOMER and MS SQL SERVER are connected via the local network.

Analogy: Lets map entities in the above two scenarios. We can easily map Tom to Client, Sierra to SQL server, Neighbor to LAN, and finally Intra network to Named Pipe Protocol.

Notes from the desk of Configuration/installation:

For Connection via Named Pipe. This option is disabled by default and needs to be enabled by the SQL Configuration Manager.

What is TDS?

Now that we know that there are three types of client/server architecture, let’s take a look at TDS:

- TDS stands for “Tabular Data Stream”.

- All three protocols use TDS packages. TDS is encapsulated in network packets. This allows data to be transferred from the client machine to the server machine.

- TDS was first developed by Sybase and now belongs to Microsoft.

Relational engine

The relational engine is also known as the query processor. It has SQL Server components that determine what exactly a query should be made and how it should best be executed. It is responsible for executing custom queries by querying data from the storage engine and processing the returned results.

As shown in the Architectural Diagram, there are 3 main components of the relational engine. Let’s have a closer look at the components:

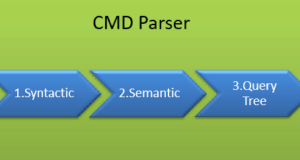

CMD parser

Data received from the protocol level is then transferred to the Relational Engine. “CMD Parser” is the first component of the relational engine, which receives data queries. The main task of CMD Parser is to check the query for syntactic and semantic errors. Finally, it generates a query tree. Let us have a closer look.

Syntax check:

- Like any other programming language, MS SQL also has a predefined set of Keywords. In addition, SQL Server has its own grammar, which is understood by the SQL server.

- SELECT, INSERT, UPDATE, and many others belong to predefined lists of MS SQL Keywords.

- CMD Parser performs syntax checking. If user input does not follow syntax or language grammar rules, it returns an error.

Example: Let’s say that the Russian went to a Japanese restaurant. He orders fast food in Russian. Unfortunately, the waiter understands only Japanese. What will be the most obvious result?

Answer: the waiter can no longer process the order.

There should be no deviations either in grammar or in the language that the SQL server accepts. If there is, the SQL-server will not be able to process it and therefore will return an error message.

We will learn more about the MS SQL query in the following tutorials. However, we will consider below the most basic query syntax as follows

SELECT * from <TABLE_NAME>;

Now, to get an idea of what the syntax does, let’s say, if the user performs a basic query, as shown below:

SELECR * from <TABLE_NAME>

Note that instead of ‘SELECT’ the user typed ‘SELECR’.

Result: The CMD analyzer will analyze this operator and generate an error message. Since “SELECR” does not correspond to a predefined keyword and grammar. Here CMD Parser expected “SELECT”.

Semantic check:

- This is performed by the normalizer.

- In its simplest form, it checks if the column name and the requested table name exist in the scheme. And if it exists, connect it to Query. This is also known as Binding.

- The complexity increases when user queries contain VIEW. The normalizer performs the substitution with a view definition saved inside and many more.

Let’s solve this with the example below –

SELECT * from USER_ID

Result: The CMD parser will analyze this operator for semantic checking. The parser will generate an error message because Normalizer will not find the requested table (USER_ID) because it does not exist.

Create a query tree:

- At this stage, another execution tree is created where you can execute a query.

- Note that all different trees have the same desired result.

Optimizer

The work of the optimizer is to create a plan of execution of the user request. This is the plan that will determine how the user request will be executed.

Please note that not all requests are optimized. Optimization is performed for DML (Data Change Language) commands such as SELECT, INSERT, DELETE, and UPDATE. Such queries are first flagged and then sent to the optimizer. DDL commands such as CREATE and ALTER are not optimized, but instead are compiled into an internal form. The cost of the query is calculated based on factors such as CPU load, memory usage, and I/O needs.

The role of the optimizer is to find the cheapest, not the best and most cost-effective execution plan.

Before going into more technical details of the Optimizer, let’s look at a real-life example:

Example:

Let’s say that you want to open an online bank account. You already know about one bank, which takes a maximum of 2 days to open an account. But you also have a list of 20 other banks, which may or may not take less than 2 days. You can start working with these banks to determine which banks take less than 2 days. Now you may not find a bank that takes less than 2 days, and because of the search activity itself, extra time is lost. It would be better to open an account with the very first bank.

Conclusion: it is more important to choose wisely. To be precise, choose which option is better rather than the cheapest.

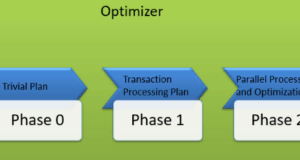

Similarly, MS SQL Optimizer works on built-in comprehensive / heuristic algorithms. The goal is to minimize the time it takes to execute a query. All optimizer algorithms are Microsoft property and are a secret. In spite of this, below are the high-level steps performed by MS SQL Optimizer. Optimizer searches are performed in three steps, as shown in the diagram below:

Step 0: search for a trivial plan:

- This is also called the pre-optimization stage.

- In some cases, there may be only one practical, workable plan known as a trivial plan. It is not necessary to create an optimized plan. The reason is that searching for more will lead to finding the same execution plan at runtime. This is also due to the additional cost of finding an optimized plan, which was not needed at all.

- If no trivial plan is found, phase 1 begins.

Stage 1: search for transaction processing plans

- This includes finding a simple and complex plan.

- Simple plan search: the past data of the column and index involved in the query will be used for statistical analysis. This usually consists of but is not limited to, one index per table.

- However, if a simple plan is not found, a more complex plan is searched. It includes several indexes per table.

Step 2: parallel processing and optimization

- If none of the above strategies work, the Optimizer looks for possibilities of parallel processing. This depends on the capabilities and configuration of the machine.

- If this is still not possible, the final optimization phase starts. Now, the ultimate goal of optimization is to find all other possible options to best fulfill the request. The final phase of optimization The algorithms are owned by Microsoft.

Query Executor

The request executor calls the access method. It provides an execution plan for the data selection logic needed to execute the request. As soon as the data are received from Storage Engine, the result is published at the protocol level. Finally, the data is sent to the end-user.

Storage Engine

The operation of the storage mechanism is to store data in a storage system, such as a disk or SAN, and retrieve the data if necessary. Before we delve into the storage mechanism, let’s take a look at how data is stored in the database and the types of files available.

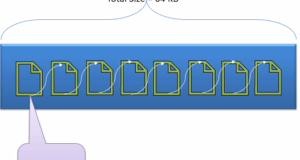

Data file and extent:

A data file that physically stores data in the form of data pages, with each data page measuring 8 KB, forming the smallest unit of storage in SQL Server. These data pages are logically grouped to form extents. No object is assigned a page in SQL Server.

Object content is executed through extents. The page has a section called “Page header” with size 96 bytes that contain metadata of page information, such as page type, page number, size of space used, size of free space, and a pointer to the next page and the previous page. and so on.

File types

Main file

- Each database contains one main file.

- All important data related to tables, views, triggers, etc., are stored here.

- There is an extension. mdf usually but there can be any extension.

Secondary file

- The database may or may not contain several secondary files.

- This is optional and contains user-specific data.

- There is an extension. ndf usually, but it can be any extension.

Log file

- Also known as Recording in front of magazines.

- There is an extension. LDF

- Used to manage transactions.

- It is used to recover from any unwanted instances. Perform the important task of reverting to uncommitted transactions.

The Storage Engine has 3 components; let us look at them in detail.

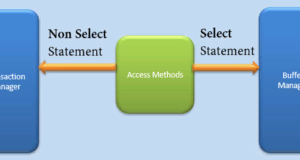

Access method

It acts as an interface between the requestor and Buffer Manager / Transaction Logs.

The access method itself does not perform any actions.

The first action is to determine if a query is a query:

- Select the request (DDL)

- Operator without choice (DDL and DML)

Depending on the result, the access method performs the following steps:

- If the query is DDL, SELECT operator, the query is sent to Buffer Manager for further processing.

- And if a DDL query, NON-SELECT operator, the query is transferred to the Transaction Manager. This mainly includes the UPDATE operator.

Buffer manager

The Buffer Manager manages the main functions of the modules below:

- Cache plan

- Data parsing: buffer cache and data storage

- Dirty page

In this section, we will learn what Plan, Buffer, and Data cache are. We will look at the dirty pages in the Transactions section.

Cache plan

Existing request plan: the buffer manager checks if the execution plan is in the saved cache of the plan. If so, the query plan cache and the associated data cache are used.

First cache plan: where does the existing cache plan come from?

If the first query execution plan is executed and complex, it makes sense to keep it in the plane cache. This will ensure higher availability when the next time the SQL server receives the same query. Thus, it is nothing more than a query that is saved by the Plan if it is executed for the first time.

Syntactic data analysis: buffer cache and data storage

The buffer manager provides access to the necessary data. Two approaches are possible below, depending on whether the data is in the data cache or not.

Buffer cache – soft parsing:

The buffer manager searches for data in the buffer in the data cache. If this data is present, this data is used by Query Executor. This improves performance by reducing the number of I/O operations when retrieving data from the cache compared to retrieving data from the data warehouse.

Storage of data is hard analysis:

If data are not available in Buffer Manager, you need to search for data in the data warehouse. If it also stores data in a data cache for further use.

Dirty page

It is stored as transaction manager processing logic. You can find out more about it in the section “Transactions manager”.

Transaction Manager

The Transaction Manager is called when the access method determines that the request is an operator without choice.

Log Manager

- The Log Manager tracks all updates made in the system through the transaction logs.

- The journals have a sequential number of journals with a transaction identifier and a record of data changes.

- This is used to track the completed transaction and rollback the transaction.

Lock Manager

- During a transaction, associated data in the data warehouse is in a locked state. This process is processed by the lock manager.

- This process ensures consistency and isolation of data. Also known as ACID properties.

Execution process

- The Journal Manager shall start the registration and the Lock Manager shall block the related data.

- The copy of the data shall be saved in the buffer cache.

- A copy of the data to be updated shall be saved in the Car Registration Journal buffer and all events shall update the data in the data buffer.

- The pages on which the data is stored shall also be called “dirty pages”.

- Checkpoint registration and queue recording: This process starts and marks all pages from the dirty pages to the disk, but the page remains in the cache. The frequency is about 1 run per minute. But the page is first moved to the log file data page from the buffer log. This is known as entry to the journal.

- Lazy writer: a dirty page may remain in memory. When an SQL server detects a huge load and a new transaction requires buffer memory, it releases the dirty pages from the cache. It runs on LRU, the smallest algorithm recently used to clear a page from buffer pool to disk.

Summary:

- There are three types of client-server architecture: 1) Shared memory 2) TCP / IP 3) Named channels

- TDS, developed by Sybase and currently owned by Microsoft, is a package that is encapsulated in network packets to transfer data from a client computer to a server computer.

- The relational engine has three main components:

- CMD Parser: it is responsible for syntax and semantic errors and finally generates a query tree.

- Optimizer: the role of the Optimizer is to find the cheapest, not the best, and most economical execution plan.

- Request Executer: The Request Executer invokes the access method and provides an execution plan for the data extraction logic needed for execution.

4. There are three file types: Primary, Secondary, and Log Files.

5. Storage mechanism: has the following important components

- Access method: this component Defines whether the query is “Select” or “Not selected”. Calls Buffer and Transfer Manager respectively.

- Buffer Manager: Buffer Manager manages the main functions for Plan Cache, Darling & Dirty Page.

- Transaction Manager: This is a transaction manager without selections through log and lock managers. It also facilitates the important implementation of Write Ahead and Lazy Writers logging.

Enteros

About Enteros

IT organizations routinely spend days and weeks troubleshooting production database performance issues across multitudes of critical business systems. Fast and reliable resolution of database performance problems by Enteros enables businesses to generate and save millions of direct revenue, minimize waste of employees’ productivity, reduce the number of licenses, servers, and cloud resources and maximize the productivity of the application, database, and IT operations teams.

MORE NEWS

PreambleNoSql is not a replacement for SQL databases but is a valid alternative for many situations where standard SQL is not the best approach for...

PreambleMongoDB Conditional operators specify a condition to which the value of the document field shall correspond.Comparison Query Operators $eq...

5 Database management trends impacting database administrationIn the realm of database management systems, moreover half (52%) of your competitors feel...

The data type is defined as the type of data that any column or variable can store in MS SQL Server. What is the data type? When you create any table or...

First the basics: what is the master/slave?One database server (“master”) responds and can do anything. A lot of other database servers store copies of all...

PreambleAtom Hopper (based on Apache Abdera) for those who may not know is an open-source project sponsored by Rackspace. Today we will figure out how to...

PreambleMongoDB recently introduced its new aggregation structure. This structure provides a simpler solution for calculating aggregated values rather...

FlexibilityOne of the most advertised features of MongoDB is its flexibility. Flexibility, however, is a double-edged sword. More flexibility means more...

PreambleSQLShell is a cross-platform command-line tool for SQL, similar to psql for PostgreSQL or MySQL command-line tool for MySQL.Why use it?If you...

PreambleWriting an application on top of the framework on top of the driver on top of the database is a bit like a game on the phone: you say “insert...

PreambleOracle Coherence is a distributed cache that is functionally comparable with Memcached. In addition to the basic function of the API cache, it...

PreambleIBM pureXML, a proprietary XML database built on a relational mechanism (designed for puns) that offers both relational ( SQL / XML ) and...

What is PostgreSQL array? In PostgreSQL we can define a column as an array of valid data types. The data type can be built-in, custom or enumerated....

PreambleIf you are a Linux sysadmin or developer, there comes a time when you need to manage an Oracle database that can work in your environment.In this...

PreambleStarting with Microsoft SQL Server 2008, by default, the group of local administrators is no longer added to SQL Server administrators during the...

PreambleWhen administering PostgreSQL database servers, one of the most common tasks you will probably perform is enumerating databases and their tables....