
Windows Server 2012

Microsoft Windows Server 2012: after four years of development, on September 4, 2012, Microsoft announced the availability of a new version of Windows Server.

Historically, Windows Server operating systems have been designed to run server hardware and provide the functionality a server needs. They also include specialized services (software components) for organizing, monitoring and managing an enterprise's IT infrastructure, from IP address space and user account management to fault-tolerant network services.

If we look at enterprise IT infrastructure as it was about ten years ago, in most cases we see the so-called "on-premise" model: the enterprise bought and owned its server hardware, deployed an operating system instance on each server, configured the required services and installed other software to provide additional functionality.

In this classic model, interaction was usually built on the client-server scheme, and in the overwhelming majority of cases the client was a personal computer. At the network level, the client PC communicated with a server over a local network; at the application level, a client application communicated with the corresponding server application.

At the same time, we are now seeing the industry transform this model into a model of ‘connected devices and continuous services’. More and more users are working with an increasing variety of Internet-connected devices: smartphones, tablets, laptops, desktops and even smart TVs.

On the enterprise server side, users no longer expect just server applications that interact with a single device; they expect services that run continuously, 24×7×365: cloud-based, smart, reliable and fast, processing data correctly and synchronizing it across all of their devices. In other words, we need a server operating system that lets us build such services. The volume of data is also growing: more and more information accumulates in relational databases, text documents and spreadsheets.

Against this background, Microsoft set out to release a true "cloud" operating system: a platform whose unit of scale is no longer a single server but an entire datacenter, with uniform approaches and tools for managing and developing applications in private, partner-hosted, public and hybrid clouds. Just as Microsoft redesigned the Windows client operating system, the paradigm of the Windows Server operating system has been fundamentally rethought.

Key building blocks here are Windows Server 2012, Windows Azure and System Center 2012 SP1.

By "cloud operating system", Microsoft means a server operating system that meets four groups of requirements:

- Transforming the datacenter. You must be able to pool all datacenter resources (storage, network, compute), divide them among cloud services and keep them highly utilized. You must be able to scale elastically, allocating additional resources to a particular service only for as long as it needs them. The infrastructure must be always up and always on. And datacenter management tasks must be automatable through APIs and self-service portals.

- Running modern applications on top of such an infrastructure. This requires a broad set of ready services for building social and mobile applications and applications that process very large data sets, i.e. support for all current trends. Businesses need flexible tools and development environments to build these applications quickly, with a fast development cycle that brings developers and operations staff together.

- Microsoft’s goal is to support the BYOD (bring your own device) trend in enterprises, while providing the necessary control and management from the IT department.

- Processing and storing any data sets under any storage paradigm, both SQL and NoSQL, and processing enterprise data together with data from external structured sources to create new opportunities.

The result is a cloud operating system and platform. The new operating system can be deployed in your own datacenter, consumed as a service from a partner's datacenter, or used through the global Windows Azure cloud, with a unified approach to virtualization, infrastructure management, application development, data management and identity services.

However, the new operating system also brings many innovations and improvements for those who do not plan to move to the cloud.

Editions and licensing in the Windows Server 2012 lineup

Licensing, editions and their limitations are usually among the most complicated topics for any vendor. With Windows Server 2012, the structure has been simplified and unified compared to the previous generation.

There are 4 editions available in the Windows Server 2012 line:

| Edition | Intended use | Main features | Licensing model | Price (Open No Level (NL) ERP) |
|---|---|---|---|---|
| Datacenter | High-density private and hybrid virtualized environments | Full-featured Windows Server. The license includes the right to run an unlimited number of virtualized instances on a single physical server | Per processor (each license covers two physical processors) + client access licenses (CALs, purchased separately) | $4,809 per two physical processors (number of cores and threads is not limited). Servers with more than two processors require additional licenses |
| Standard | Non-virtualized or low-density virtualized environments | Full-featured Windows Server. The license includes the right to run two virtualized instances on the same physical server | Per processor (each license covers two physical processors) + CALs (purchased separately) | $882 per two physical processors (number of cores and threads is not limited). Servers with more than two processors and/or more than two virtualized instances require additional licenses |
| Essentials | Small businesses | Limited Windows Server functionality. No rights to run additional virtualized instances. Maximum 25 users. Maximum two physical processors (number of cores and threads is not limited) | Per server; no CALs required | $501 |
| Foundation | Entry-level, economy edition | Limited Windows Server functionality. No rights to run additional virtualized instances. Maximum 15 users. Maximum one physical processor (number of cores and threads is not limited) | Per server; no CALs required | Available only preinstalled on hardware |
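To make the table's per-processor and per-instance rules concrete, here is a small Python sketch that compares the cost of Standard and Datacenter on a single host. It assumes that Standard licenses can be stacked on one server (each additional license adds two processors and two virtualized instances), uses only the prices from the table and ignores client access licenses; treat it as an illustration of the arithmetic, not as licensing advice.

```python
import math

# Prices from the table above (Open NL ERP, USD). CALs are not included.
STANDARD_PRICE = 882     # one license: 2 physical processors, 2 virtualized instances
DATACENTER_PRICE = 4809  # one license: 2 physical processors, unlimited instances


def standard_licenses(processors: int, vms: int) -> int:
    """Standard licenses for one host, assuming licenses can be stacked.

    Each license covers two processors and two virtualized instances, so the
    host needs enough licenses to satisfy whichever requirement is larger.
    """
    return max(math.ceil(processors / 2), math.ceil(vms / 2), 1)


def datacenter_licenses(processors: int) -> int:
    """Datacenter licenses for one host: one per pair of processors."""
    return max(math.ceil(processors / 2), 1)


def cheapest_edition(processors: int, vms: int) -> tuple:
    """Return (edition, total price) for the cheaper option on one host."""
    standard = standard_licenses(processors, vms) * STANDARD_PRICE
    datacenter = datacenter_licenses(processors) * DATACENTER_PRICE
    if standard <= datacenter:
        return ("Standard", standard)
    return ("Datacenter", datacenter)


for vm_count in (2, 10, 11, 20):
    print(vm_count, cheapest_edition(processors=2, vms=vm_count))
```

Under these assumptions, on a two-processor host Standard stays cheaper up to 10 virtual machines (5 × $882 = $4,410), and from 11 virtual machines Datacenter wins (6 × $882 = $5,292 versus $4,809).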

The Windows Small Business Server (SBS) and Windows Home Server product lines will not be developed further: Microsoft observed that their target audiences (home users and small businesses) increasingly choose cloud services for tasks such as e-mail, collaboration and backup instead of deploying their own infrastructure.

The Enterprise, High-Performance Computing (HPC) and Web Server editions of the previous generation are not offered in the new one either.

An important change is that the Datacenter and Standard editions now have identical functionality: you can build high-availability failover clusters with a Standard license.

Virtualization Platform (Hyper-V)

Since virtualization is the cornerstone of the cloud, many new things have emerged in this area.

Microsoft has addressed the need of large companies and cloud providers to manage their datacenters better, taking into account the consumption of resources: compute, storage and network.

Scalability

If previous-generation virtualization solutions did not provide enough capacity for an enterprise's workloads, Windows Server 2012 may be the answer, because Hyper-V 3.0 supports:

- up to 320 logical processors per physical host and up to 64 virtual processors per virtual machine;

- up to 4 TB of RAM per physical host and up to 1 TB of memory per virtual machine;

- virtual hard disks (VHDX) of up to 64 TB;

- Hyper-V clusters of up to 64 nodes, with up to 8,000 virtual machines per cluster and up to 1,024 virtual machines per node.

It seems to me that only a very small fraction of the workloads running on x86-64 servers cannot be virtualized within these limits.
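When planning a migration, the per-VM limits above can be turned into a quick sanity check. The Python sketch below flags a planned virtual machine that exceeds them; the `VmPlan` class and `check_vm` function are hypothetical helpers written for this example, not part of any Microsoft tool, and 1 TB is treated as 1,024 GB.

```python
from dataclasses import dataclass, field

# Per-VM limits of Hyper-V 3.0 as listed in the article.
MAX_VCPUS_PER_VM = 64
MAX_VM_MEMORY_GB = 1024   # 1 TB
MAX_VHDX_SIZE_TB = 64


@dataclass
class VmPlan:
    """Hypothetical description of a planned virtual machine."""
    name: str
    vcpus: int
    memory_gb: int
    disk_sizes_tb: list = field(default_factory=list)


def check_vm(plan: VmPlan) -> list:
    """Return a list of limit violations; an empty list means the plan fits."""
    problems = []
    if plan.vcpus > MAX_VCPUS_PER_VM:
        problems.append(f"{plan.name}: {plan.vcpus} vCPUs exceeds {MAX_VCPUS_PER_VM}")
    if plan.memory_gb > MAX_VM_MEMORY_GB:
        problems.append(f"{plan.name}: {plan.memory_gb} GB RAM exceeds {MAX_VM_MEMORY_GB} GB")
    for size_tb in plan.disk_sizes_tb:
        if size_tb > MAX_VHDX_SIZE_TB:
            problems.append(f"{plan.name}: {size_tb} TB disk exceeds {MAX_VHDX_SIZE_TB} TB")
    return problems


print(check_vm(VmPlan("sql01", vcpus=32, memory_gb=512, disk_sizes_tb=[2, 10])))
print(check_vm(VmPlan("bigdata", vcpus=96, memory_gb=2048, disk_sizes_tb=[80])))
```

The first plan fits and yields an empty list; the second breaks all three limits and yields three messages.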

Live Migration

Live Migration is the ability to move virtual machines between physical servers without interrupting service to clients. Starting with Windows Server 2012, it can move more than one virtual machine at a time; the number of virtual machines migrating simultaneously between hosts depends on hardware capacity. Using the new SMB 3.0 protocol (see below) and network adapter aggregation (NIC Teaming), 120 VMs can be migrated simultaneously.

Also, because SMB 3.0 supports placing virtual hard disks on file shares, Live Migration is now possible without shared cluster storage (CSV, Cluster Shared Volumes).

Microsoft says that "shared-nothing" live migration is possible even if all you have between the hosts is an Ethernet cable.

Networking capabilities

The Hyper-V networking infrastructure has also been significantly improved. If you install high-end network adapters with many hardware-level features, such as IPsec offload, in the physical virtualization host, Hyper-V 3.0 will very likely let you use all of these features inside the virtual machines.

The virtual switch, which handles traffic for the virtual machines' network adapters, has been substantially redesigned. The main improvement is a new open architecture that lets third-party vendors use documented APIs to write extensions implementing packet inspection and filtering, proprietary switching protocols, firewalls and intrusion detection systems.

One solution built on this architecture has already been released: the Cisco Nexus 1000V virtual switch.

Even without third-party solutions, the virtual switch has become more powerful; for example, you can now control the bandwidth of individual virtual machines.
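Capping a virtual machine's bandwidth is a classic rate-limiting problem. The Python sketch below shows a generic token bucket, a standard technique for enforcing such a cap; it is an illustration under that assumption, not a description of how the Hyper-V virtual switch enforces its limits internally. Time is passed in explicitly so the behaviour is deterministic.

```python
class TokenBucket:
    """Generic token-bucket rate limiter.

    rate_bps: sustained rate in bytes per second.
    burst_bytes: how many bytes may pass at once after an idle period.
    """

    def __init__(self, rate_bps: float, burst_bytes: float) -> None:
        self.rate = rate_bps
        self.capacity = burst_bytes
        self.tokens = burst_bytes   # start with a full bucket
        self.last = 0.0

    def allow(self, packet_bytes: int, now: float) -> bool:
        # Refill tokens for the time elapsed since the last call, up to the burst size.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if packet_bytes <= self.tokens:
            self.tokens -= packet_bytes
            return True
        return False  # over the limit: the packet would be dropped or queued


bucket = TokenBucket(rate_bps=1000, burst_bytes=1500)
print(bucket.allow(1500, now=0.0))   # True: the burst allowance covers it
print(bucket.allow(100, now=0.05))   # False: only ~50 bytes of credit so far
print(bucket.allow(100, now=0.2))    # True: ~200 bytes of credit by now
```

The burst size decides how "bursty" a VM may be, while the rate sets its long-term share of the link.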

Other Hyper-V mechanisms

Many other improvements have also been made: Hyper-V has better NUMA support; the new VHDX virtual hard disk format supports large disks and drives with native 4K sectors; and Dynamic Memory gains a Minimum Memory setting, separate from startup memory, that lets the hypervisor reclaim memory from a virtual machine that does not need it. It is also now possible to make incremental backups of running virtual machines.

Network subsystem

IPv6

Windows Server 2012 uses a reworked implementation of the TCP/IP protocol stack. IPv6 is now the primary protocol, and at the internal software level IPv4 is handled as a subset of IPv6.

Many higher-level protocols and services also give IPv6 priority: when both IPv6 and IPv4 are available, IPv6 is chosen. For example, when resolving a host name through DNS, the system first tries to obtain the host's IPv6 address.
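Two ideas from these paragraphs can be illustrated with Python's standard `ipaddress` module: the standard notation for an IPv4 address inside the IPv6 address space (an IPv4-mapped address, `::ffff:a.b.c.d`) and an IPv6-first ordering of name-resolution results. Both functions are hypothetical helpers; the ordering rule is a deliberately simplified stand-in for the default address-selection policy, which has many more rules, and nothing here claims to reproduce Windows internals.

```python
import ipaddress


def to_ipv4_mapped(ipv4: str) -> ipaddress.IPv6Address:
    """Embed an IPv4 address in the IPv6 space as ::ffff:a.b.c.d."""
    return ipaddress.IPv6Address(f"::ffff:{ipaddress.IPv4Address(ipv4)}")


def prefer_ipv6(addresses: list) -> list:
    """Put IPv6 candidates first, keeping the original order within each family."""
    # sorted() is stable; the key is False for IPv6 and True for IPv4.
    return sorted(addresses, key=lambda a: ipaddress.ip_address(a).version != 6)


mapped = to_ipv4_mapped("192.0.2.10")
print(mapped.ipv4_mapped)  # 192.0.2.10
print(prefer_ipv6(["192.0.2.10", "2001:db8::1", "198.51.100.7", "2001:db8::2"]))
```

The sample addresses come from the ranges reserved for documentation.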

Let us look at some features of IPv6:

- The first thing everyone mentions is the larger address space: 2¹²⁸ ≈ 3.4×10³⁸ addresses, enough to give every node its own globally unique IPv6 address. Moreover, current IPv6 allocation rules, which take the address structure into account, call for assigning each enterprise subnet its own /64 block. An enterprise can thus give its servers, client PCs and devices 2⁶⁴ unique, globally routable IPv6 addresses per subnet, 2³² times more than the entire IPv4 address space of the Internet;

- the ability to give every network node (every device) its own globally routable IPv6 address will greatly simplify services that must proactively deliver messages from the Internet to recipients inside corporate (and home) networks, mainly unified communications applications: messengers, communicators, etc. Culture shock: no more PAT (NAT)! Client applications will no longer need to keep a TCP session constantly open through PAT (NAT), and servers will no longer need to hold thousands or tens of thousands of such sessions;

- IPv6 reduces the load on network equipment, and therefore the requirements for it, which will be especially noticeable on heavily loaded ISP equipment: routers no longer fragment packets (only the sending node may), there is no header checksum to verify, and the base header has a fixed length;

- IPv6 was designed with IPsec support in every node (the original node requirements made it mandatory). Many protocols developed in the early days of the Internet later needed secure counterparts to be developed and standardized, often as protocol-specific "wrappers" (HTTP/HTTPS, FTP/FTPS, etc.). IPv6 makes it possible to move away from this "zoo" and protect the connection between two nodes uniformly at layer 3 of the network model, for any protocol;

- IPv6 potentially lets mobile devices roam between the wireless networks of different operators (Wi-Fi, 3G, 4G, etc.) without breaking client software sessions, such as the voice traffic of SIP clients.
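The address-space arithmetic in the first bullet and the fixed-header point in the third bullet can both be checked with the Python standard library. The sketch below uses the documentation prefix `2001:db8::/32` for sample addresses and the base header layout from the IPv6 specification (a fixed 40-byte header with no checksum field); `parse_ipv6_header` is a hypothetical helper written for this example.

```python
import ipaddress
import struct

# Address-space arithmetic from the first bullet.
TOTAL_IPV6 = 2 ** 128                     # about 3.4 x 10^38 addresses
subnet = ipaddress.ip_network("2001:db8:1234:5678::/64")
ipv4_total = 2 ** 32
print(f"{TOTAL_IPV6:.2e}")                            # 3.40e+38
print(subnet.num_addresses == 2 ** 64)                # True
print(subnet.num_addresses // ipv4_total == 2 ** 32)  # True

# Base IPv6 header: version/traffic class/flow label, payload length,
# next header, hop limit, source, destination. Always 40 bytes, no checksum.
IPV6_HEADER = struct.Struct("!IHBB16s16s")


def parse_ipv6_header(data: bytes) -> dict:
    first_word, payload_len, next_header, hop_limit, src, dst = IPV6_HEADER.unpack_from(data)
    return {
        "version": first_word >> 28,
        "traffic_class": (first_word >> 20) & 0xFF,
        "flow_label": first_word & 0xFFFFF,
        "payload_length": payload_len,
        "next_header": next_header,       # e.g. 6 = TCP
        "hop_limit": hop_limit,
        "src": ipaddress.IPv6Address(src),
        "dst": ipaddress.IPv6Address(dst),
    }


sample = IPV6_HEADER.pack(
    (6 << 28) | 0x12345, 20, 6, 64,
    ipaddress.IPv6Address("2001:db8::1").packed,
    ipaddress.IPv6Address("2001:db8::2").packed,
)
print(IPV6_HEADER.size, parse_ipv6_header(sample)["dst"])  # 40 2001:db8::2
```

Because every field sits at a fixed offset, a router can read the header without first computing its length or verifying a checksum.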

In particular, DirectAccess, a technology included in Windows Server, provides secure remote access from a client computer to the corporate LAN transparently to the user. No VPN client or manual connection is needed on the user side: once DirectAccess is configured, it simply works.

Technically, DirectAccess is Microsoft's IPv6-based IPsec VPN implementation. (That does not mean it will not work over IPv4 transport networks.)

By the way, with Windows Server 2012 you do not need to deploy a PKI if all DirectAccess clients run Windows 8.

SMB3

Microsoft networks use the SMB (Server Message Block) protocol to access file server resources. We have been working with protocol version 2 for quite a long time, and Windows Server 2012 introduces a new version, SMB 3.0.

In fact, SMB 3.0 should be considered not so much in the context of the network subsystem as in the context of the storage subsystem, and we will see why later.

From the network subsystem's point of view, SMB 3.0 is tuned for modern fast local networks (gigabit and faster): it optimizes how the TCP transport protocol is used on such networks and can take advantage of Ethernet jumbo frames. It is also adapted for IPv6.

Storage subsystem

Large enterprises often use dedicated storage solutions to build their IT infrastructures, achieving a certain level of flexibility: a drive shelf can be used simultaneously by multiple servers, and capacity can be flexibly allocated between them as needed.

At the same time, these specialized solutions are expensive both to acquire and to maintain. Administering and supporting these devices and interfaces (e.g., Fibre Channel) requires administrators with the appropriate knowledge and skills.

When designing Windows Server 2012, Microsoft aimed to build into the server operating system functionality that gives applications such as SQL Server and Hyper-V the same storage reliability as specialized solutions, but using relatively inexpensive, "dumb" disk arrays. Moreover, to unify administration, the implementation does not even use block protocols (such as iSCSI); it works over the ordinary SMB file-server protocol.

This was achieved mainly through two mechanisms: Storage Spaces and the SMB 3.0 protocol.

Accordingly, SMB 3.0 can:

- make the most of "smart" disk shelves, if you already have them: with Offloaded Data Transfer (ODX), the server only sends the shelf a command to move data, the shelf performs the copy internally at high speed and returns the result to the server;

- open more than one TCP session to copy a file when the NICs support RSS (receive side scaling);

- use more than one NIC at a time to increase performance: if two Windows Server 2012 (or Windows 8) machines each have two NICs, copy throughput increases;

- use more than one NIC at a time for fault tolerance: if two Windows Server 2012 (or Windows 8) machines each have two NICs and one of them fails, the copy does not stop but completes successfully;

- provide a Continuously Available File Server, a truly fault-tolerant cluster of file servers*.
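The two multi-NIC points can be pictured with a toy model: a file is split into chunks that are dealt round-robin over the network adapters that are still up, so a failed adapter simply drops out of the rotation and the copy completes over the remaining ones. This Python sketch is purely illustrative; `copy_over_nics` and its parameters are hypothetical, and real SMB 3.0 negotiates and manages its channels very differently.

```python
import math
from typing import Dict, List, Optional


def copy_over_nics(num_chunks: int, nics: List[str],
                   fails_at: Optional[Dict[str, int]] = None) -> Dict[str, List[int]]:
    """Assign file chunks to NICs round-robin, skipping NICs that have failed.

    fails_at maps a NIC name to the chunk index at which that link goes down;
    NICs not listed never fail. Returns the chunk indexes carried by each NIC.
    """
    fails_at = fails_at or {}
    carried: Dict[str, List[int]] = {nic: [] for nic in nics}
    for chunk in range(num_chunks):
        alive = [nic for nic in nics if chunk < fails_at.get(nic, math.inf)]
        if not alive:
            raise ConnectionError(f"no working path left at chunk {chunk}")
        carried[alive[chunk % len(alive)]].append(chunk)
    return carried


print(copy_over_nics(4, ["nic1", "nic2"]))               # both links share the load
print(copy_over_nics(6, ["nic1", "nic2"], {"nic2": 2}))  # nic2 fails, nic1 finishes
```

With both adapters healthy, the chunks alternate between them; after `nic2` fails, every remaining chunk goes over `nic1` and the copy still completes.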

A digression is in order here. The name Windows Server Failover Clustering has long been loosely rendered as "fault-tolerant cluster". In fact, it is a high-availability cluster: if the node serving a client fails abnormally, service to that client is interrupted. Then, without administrator intervention, the service is brought up on another node of the cluster and continues serving the client. How much a particular client suffers from this brief loss of service depends on the service and on the stage of the session at which the failure occurred.

The new Continuously Available File Server is exactly that "fault-tolerant file server": a cluster acting as a file server in which the failure of the node currently serving a particular client (for example, while the client is copying a large file from the server to its local disk) does not interrupt service for that client; another node of the cluster simply continues delivering the file.

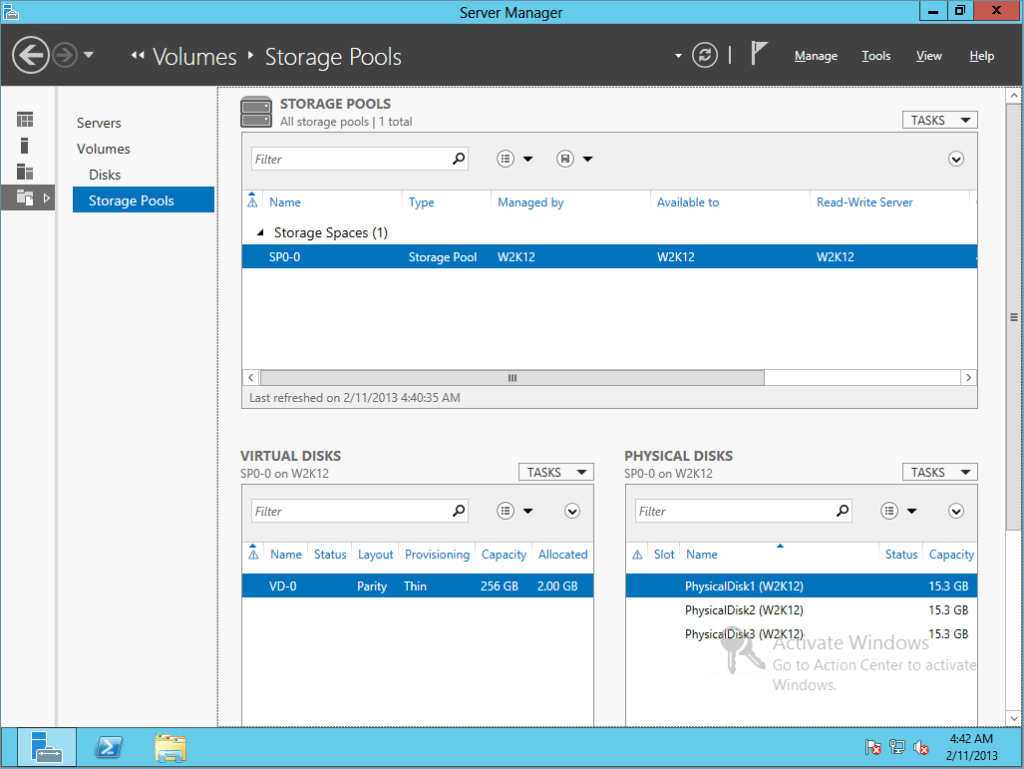

Storage Spaces

Storage Spaces is a new mechanism that appeared in Windows Server 2012.

Its key selling point is that it lets you build a highly available and scalable storage infrastructure with a much lower total cost of ownership (TCO) than specialized storage solutions.

The idea is as follows. A server runs Windows Server 2012 and has DAS (directly attached storage): SATA or SAS disks inside the chassis, or external disk shelves connected over SAS. The shelves do not need to provide any advanced processing; there is no need for them to implement RAID, it is enough to present a JBOD (just a bunch of disks). In this case, a shelf is just hardware with power supplies, drive trays and a SAS interface.

Storage Spaces defines so-called Storage Pools, the basic logical building blocks of the storage system. A pool includes one or more physical disks. At the Storage Pool level, a disk can be designated as a hot spare: it automatically becomes active when one of the working disks in the pool fails.

Virtual disks are then created inside the Storage Pool. A virtual disk can use one of three layouts:

- Simple: data is striped across the physical disks, increasing performance but reducing reliability (roughly analogous to RAID 0);

- Mirror: data is duplicated on two or three disks, increasing reliability at the cost of capacity efficiency (roughly analogous to RAID 1);

- Parity: data and parity blocks are distributed across the disks, a compromise between the first two layouts (roughly analogous to RAID 5).
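To see what the three layouts cost in capacity, here is a rough Python calculator. It assumes equal-sized disks (extra space on larger disks is ignored), one column per disk and no space reserved for metadata or hot spares, and it only enforces the mathematical minimum number of disks for each layout; the real Storage Spaces allocator has its own rules and minimums, so treat the results as estimates.

```python
# Mathematical minimum number of disks per layout (Storage Spaces may require more).
MIN_DISKS = {"simple": 1, "mirror2": 2, "mirror3": 3, "parity": 3}


def usable_capacity_gb(disk_sizes_gb: list, layout: str) -> float:
    """Estimate the usable capacity of a virtual disk spread over all pool disks."""
    if layout not in MIN_DISKS:
        raise ValueError(f"unknown layout: {layout}")
    n = len(disk_sizes_gb)
    if n < MIN_DISKS[layout]:
        raise ValueError(f"{layout} needs at least {MIN_DISKS[layout]} disks")
    raw = float(min(disk_sizes_gb) * n)   # treat every disk as the smallest one
    if layout == "simple":
        return raw                        # striping only, no redundancy
    if layout == "mirror2":
        return raw / 2                    # every block stored twice
    if layout == "mirror3":
        return raw / 3                    # every block stored three times
    return raw * (n - 1) / n              # one disk's worth of space holds parity


for layout in MIN_DISKS:
    print(layout, usable_capacity_gb([16, 16, 16], layout))
```

For three 16 GB disks this gives 48 GB (simple), 24 GB (two-way mirror), 16 GB (three-way mirror) and 32 GB (parity), which matches the RAID 0/1/5 analogies above.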

Virtual disks can be fixed, with their full size allocated on the physical disks at creation time, or they can consume space from the Storage Pool as data is written, up to the size specified for the disk.

After you create a virtual disk, the OS offers to create a partition on it, using all or part of the virtual disk's space, format the partition and assign it a drive letter. In other words, Storage Spaces produces virtual hard disks that sit logically between the hardware and Disk Management.

What is the advantage over the good old software RAID that Windows NT has had since time immemorial?

Probably the biggest difference is the ability to create virtual disks larger than the capacity of the underlying physical disks (taking the layout into account). For example, with a Storage Pool of three 16 GB disks, you can create a 120 GB virtual disk. As the disk fills up with data and the available physical capacity runs out, you can add new physical disks to the Storage Pool without changing anything at the partition or data level.
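The accounting behind this behaviour (often called thin provisioning) can be modelled in a few lines of Python. The `StoragePool` and `ThinVirtualDisk` classes are hypothetical; the model ignores resiliency overhead, so it behaves like a Simple layout, and it uses the numbers from the example above: three 16 GB disks backing a 120 GB virtual disk.

```python
class StoragePool:
    """Hypothetical pool: tracks physical capacity and what has been allocated."""

    def __init__(self, disk_sizes_gb):
        self.capacity_gb = sum(disk_sizes_gb)
        self.allocated_gb = 0

    def add_disk(self, size_gb):
        self.capacity_gb += size_gb       # nothing changes at the partition level

    def allocate(self, size_gb):
        if self.allocated_gb + size_gb > self.capacity_gb:
            raise OSError("pool is out of physical space: add disks")
        self.allocated_gb += size_gb


class ThinVirtualDisk:
    """Hypothetical thin disk: the OS sees size_gb, physical space is used on write."""

    def __init__(self, pool, size_gb):
        self.pool = pool
        self.size_gb = size_gb            # may exceed the pool's physical capacity
        self.used_gb = 0

    def write(self, size_gb):
        if self.used_gb + size_gb > self.size_gb:
            raise OSError("virtual disk is full")
        self.pool.allocate(size_gb)       # physical space is taken only now
        self.used_gb += size_gb


pool = StoragePool([16, 16, 16])          # 48 GB of physical disks
disk = ThinVirtualDisk(pool, 120)         # the OS sees a 120 GB disk
disk.write(40)
try:
    disk.write(10)                        # 50 GB > 48 GB of physical space
except OSError as err:
    print("write failed:", err)
pool.add_disk(16)                         # grow the pool to 64 GB
disk.write(10)
print(disk.used_gb, "GB used of", disk.size_gb, "GB;", pool.capacity_gb, "GB physical")
```

The failed write leaves both counters unchanged, and after a fourth disk is added the same write succeeds without touching the virtual disk's declared size.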

I would also like to say something about performance. At a certain level of the architecture, Storage Spaces is a software RAID implementation. A software array is not necessarily slow or bad. For example, Intel Matrix RAID looks like hardware RAID "from the outside", but actually uses CPU resources to compute the higher RAID levels. The performance of different solutions should be tested thoroughly, preferably not with abstract synthetic benchmarks but with tests that simulate or reproduce a real workload.

Storage Spaces can be moved between servers without problems: if the production server fails, you can connect its disks to a new server and import the existing Storage Spaces configuration.

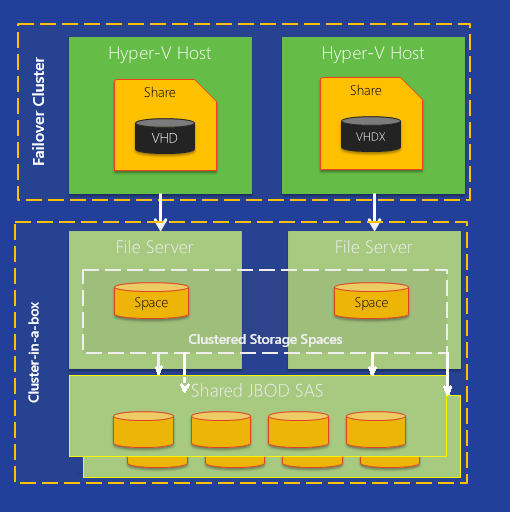

Thanks to Storage Spaces and SMB 3.0, among other new technologies, the following architecture can be built:

Two Hyper-V hosts run virtual machines in high-availability mode. The virtual hard disks and virtual machine configuration files are stored on an SMB 3.0 file share. The share, in turn, is provided by a Continuously Available File Server (a failover cluster) consisting of two servers, each with two SAS HBAs (host bus adapters), each of which is connected to two identical drive enclosures. The file servers use "mirror" Storage Spaces, and Cluster Shared Volumes (CSV) are created on the resulting disks. The storage subsystem thus has no single point of failure at all, and it is built entirely with Microsoft Windows Server 2012.

Data deduplication

It is practically standard for each employee to get a personal folder on a file server. Typically, the file server's disk is a fault-tolerant RAID array, regular backups are performed, and only the employee has access rights to the personal folder. This effectively protects both the information and the interests of the enterprise.

A common variation: Windows folder redirection moves the "My Documents" and "Desktop" folders to the file server. The employee uses these folders as usual, but physically they are stored on the file server.

Now imagine that a file of interest to several employees appears in a shared folder: for example, a presentation of a new product, or photos from a corporate event. Quite soon, copies of these files will be scattered across personal folders, and each copy takes up space on the file server.

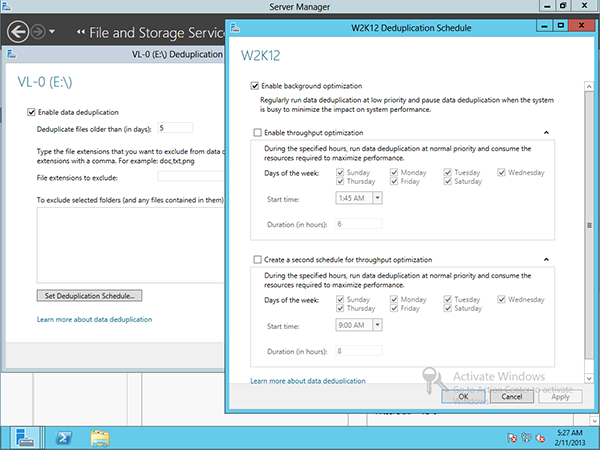

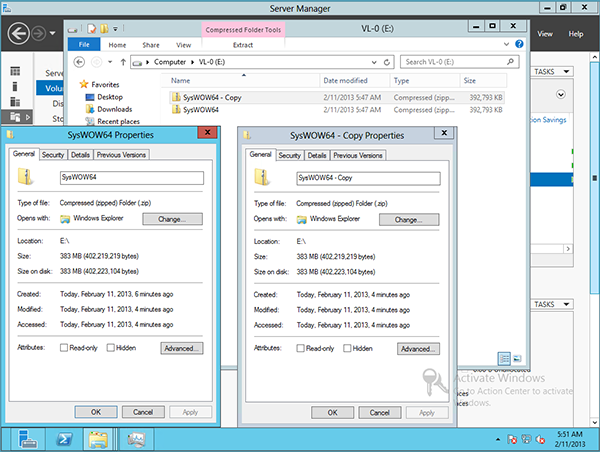

In such a situation, a new mechanism of Windows Server 2012 – data deduplication – may appear.

When activated for a specific volume, Windows Server 2012 starts to analyze the volume for blocks (not files, but blocks) that contain identical data and store it in a single copy on a schedule. Of course, this is completely invisible to the user.

By placing two identical files on disk, we get the following picture before and after deduplication:

The deduplication mechanism does not support ReFS and EFS protected data; files less than 32 KB and files with extended attributes, Cluster Shared Volumes and system volumes are not processed.
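Here is a minimal Python sketch of the block-level idea: split each file into blocks, identify blocks by a hash, store each distinct block once and keep, per file, a list of references. It assumes fixed 4 KB blocks and SHA-256 purely for simplicity; the chunking, hashing and chunk-store format of the real Windows Server 2012 feature are not shown here, and the `DedupStore` class is hypothetical. The 32 KB threshold mirrors the limitation mentioned in the text.

```python
import hashlib

BLOCK_SIZE = 4096             # fixed-size blocks: a simplification for this sketch
MIN_FILE_SIZE = 32 * 1024     # files under 32 KB are not processed


class DedupStore:
    """Hypothetical block-level deduplicating store."""

    def __init__(self):
        self.chunks = {}      # SHA-256 digest -> the single stored copy of a block
        self.files = {}       # file name -> ordered list of block digests
        self.plain = {}       # small files stored as-is, without deduplication

    def put(self, name, data):
        if len(data) < MIN_FILE_SIZE:
            self.plain[name] = data
            return
        digests = []
        for offset in range(0, len(data), BLOCK_SIZE):
            block = data[offset:offset + BLOCK_SIZE]
            digest = hashlib.sha256(block).hexdigest()
            self.chunks.setdefault(digest, block)   # keep only the first copy
            digests.append(digest)
        self.files[name] = digests

    def get(self, name):
        if name in self.plain:
            return self.plain[name]
        return b"".join(self.chunks[d] for d in self.files[name])

    def stored_bytes(self):
        return (sum(len(b) for b in self.chunks.values())
                + sum(len(b) for b in self.plain.values()))


# A 36 KB "presentation" made of nine distinct 4 KB blocks.
presentation = b"".join(hashlib.sha256(bytes([i])).digest() * 128 for i in range(9))
store = DedupStore()
store.put("alice/product.pptx", presentation)
store.put("bob/product.pptx", presentation)
print(len(presentation) * 2, "bytes written,", store.stored_bytes(), "bytes stored")
```

Two copies of the same 36 KB file occupy the space of one, and reading either file reassembles it from the shared blocks.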

New file system – ReFS

The new file system is available only in Windows Server 2012. Although it remains compatible with NTFS at the API level, it has a number of limitations:

- NTFS encryption is not supported;

- NTFS compression is not supported;

- it is not supported on system volumes.

ReFS stores file system information in B+ trees, which make it fast to find the information you need. Instead of the NTFS journaling mechanism, it uses a different approach to consistency: metadata updates are written to new locations (allocate-on-write) rather than overwriting data in place. ReFS also stores extended information about files, such as checksums, which let it detect corruption and, on mirrored Storage Spaces, read a correct copy of the data instead; this helps protect against "bit rot".
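The detect-and-repair idea can be sketched in Python: keep a checksum alongside each block, verify it on every read, and if one copy on a two-way mirror fails verification, return the good copy and overwrite the bad one. The `MirroredBlock` class is hypothetical, CRC-32 from `zlib` stands in for whatever checksum ReFS actually uses, and ReFS's real on-disk structures are far more involved.

```python
import zlib


class MirroredBlock:
    """Hypothetical: one data block stored twice, plus its checksum."""

    def __init__(self, data: bytes):
        self.copies = [bytearray(data), bytearray(data)]
        self.checksum = zlib.crc32(data)

    def _is_valid(self, copy) -> bool:
        return zlib.crc32(copy) == self.checksum

    def read(self) -> bytes:
        good = next((bytes(c) for c in self.copies if self._is_valid(c)), None)
        if good is None:
            raise OSError("all copies failed checksum verification")
        # Repair any copy that has silently changed ("bit rot").
        for i, copy in enumerate(self.copies):
            if not self._is_valid(copy):
                self.copies[i] = bytearray(good)
        return good


block = MirroredBlock(b"quarterly report")
block.copies[0][0] ^= 0x01          # simulate a flipped bit in the first copy
print(block.read())                 # b'quarterly report'
print(bytes(block.copies[0]))       # repaired: b'quarterly report'
```

Without a second copy the checksum could only detect the damage, which is why the automatic repair depends on mirrored storage.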

CHKDSK

The CHKDSK utility, which checks the logical integrity of the file system, previously required exclusive access to the volume (the volume had to be dismounted). In Windows Server 2012 it can work in the background. For example, if a large disk holding SQL Server data needs to be checked, after a reboot the server starts, SQL Server starts and begins serving clients, and CHKDSK runs in the background.

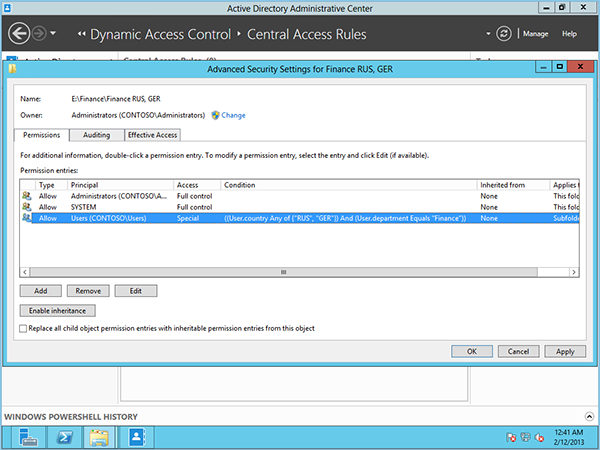

Dynamic Access Control

In previous generations of Windows Server, access to file server resources was controlled through access control lists (ACLs) on the resources and the membership of user accounts in groups.

With many file servers, many resources on them and many users, administering access became time-consuming. Imagine a situation with:

- several sales regions;

- several levels of access to information within sales divisions;

- several types of documents in terms of confidentiality;

- possibility of access from several categories of devices: trusted and untrusted.

The design quickly gets complicated. User accounts end up in groups like "G-Sales-RUS-High_Clearance-…". The fourth requirement (trusted versus untrusted devices) cannot be handled at all. We also partly duplicate our work: if the "Region" attribute is already filled in correctly for a user account in Active Directory, we still have to add that account to the "G-Sales-RUS-…" group ourselves.

This is where Dynamic Access Control comes in. The idea is that when deciding whether to grant access to a resource (file or folder), a file server can take into account certain attributes of the user and the device, taken from Active Directory. For example: grant access only if the user account's Country is Russia or Germany, the Department is Finance, and the type of the device the user is accessing from is Managed.

For administrators, this can greatly simplify the task of placing user accounts into groups in some scenarios. The user arrives at the file server already carrying a set of attributes taken from Active Directory, and the access rules on the resource combine the values of these attributes into logical expressions.
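The example rule can be expressed as a small boolean expression over user and device attributes. The Python sketch below evaluates it against a dictionary of attributes; the attribute names (`user.Country`, `user.Department`, `device.Managed`) and the helper functions are hypothetical. In Windows Server 2012 such rules are actually defined through central access policies and conditional access control entries, not through code like this.

```python
from typing import Callable, Dict

Claims = Dict[str, object]
Rule = Callable[[Claims], bool]


def one_of(claim: str, allowed: set) -> Rule:
    return lambda claims: claims.get(claim) in allowed


def equals(claim: str, value: object) -> Rule:
    return lambda claims: claims.get(claim) == value


def all_of(*rules: Rule) -> Rule:
    return lambda claims: all(rule(claims) for rule in rules)


# Country = Russia or Germany AND Department = Finance AND device is Managed
finance_rule = all_of(
    one_of("user.Country", {"Russia", "Germany"}),
    equals("user.Department", "Finance"),
    equals("device.Managed", True),
)

laptop = {"user.Country": "Germany", "user.Department": "Finance", "device.Managed": True}
kiosk = {**laptop, "device.Managed": False}
print(finance_rule(laptop), finance_rule(kiosk))   # True False
```

The same user is allowed from a managed laptop and denied from an unmanaged kiosk, which is exactly the device condition that plain group membership cannot express.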

Deployment requires, among other things, at least the Windows Server 2003 forest functional level, at least one domain controller running Windows Server 2012, and a file server running Windows Server 2012. Clients can run Windows 7.

Scenarios in which Dynamic Access Control works together with the File Classification Infrastructure (FCI) are particularly interesting. Extended attributes such as "LevelAccess = ConfidentialInformation" can be set on files automatically based on their content, and access to such files can then be restricted, for example, to the "G_Managers" group of the "Management Company" department.

RDP 8 / RemoteFX second generation

The Remote Desktop Protocol (RDP) has changed in interesting ways with the release of RDP 8.

Historically, in its early days RDP transmitted GDI (Windows graphics) commands, which were executed (drawn) on the remote terminal.

Over time, the protocol gained various types, methods and techniques for encoding and transmitting drawing tasks.

Windows Server 2008 R2 SP1 introduced RemoteFX, which changed the approach completely. With RemoteFX, the RDP server rendered everything itself, took the finished framebuffer, encoded it with a single codec and sent it to the client.

RDP 8 now includes what could be called second-generation RemoteFX. The framebuffer is analyzed, different codecs are chosen for different parts of the screen (graphics, static images, animation and video), and the parts are encoded and sent to the client separately. Images use progressive rendering: the client sees a low-resolution version immediately, and the details arrive as fast as the bandwidth allows.

First-generation RemoteFX worked only if the RDP host was a Hyper-V virtual machine and the system had a compatible video adapter supporting DirectX 10. These requirements are gone: both the graphics features and USB device redirection now work on an RDP host installed directly on hardware, without virtualization and without a DirectX 10 video adapter. (USB redirection lets any USB device connected to the client terminal, such as a USB license dongle, work in the terminal session; the redirection happens at the USB protocol level.)

RDP 8/RemoteFX 2 adapts to the characteristics of the connection using adaptive codecs: over a LAN, video plays in excellent quality, while over a thin WAN link you will still see something 😉

RDP 8 supports multi-touch and gestures, so, for example, you can open an RDP connection from a Microsoft Surface to a server and use x86 applications. The RDP client has a remote cursor that helps you hit desktop interface controls with your finger.

There is also a new API that lets applications use bandwidth-aware codecs. For example, when a user's PC is deployed as a virtual machine (so-called VDI), a unified communications client running in the terminal session and receiving audio and video streams from the RDP client can take advantage of RDP 8. The Lync 2013 client currently uses this.

The RDP8 client with support for all new functionality is already available for Windows 7.

Server Core / Minimal Server Interface Modes

The verdict is in: Server Core is the main recommended mode for installing and running Windows Server 2012. Its advantages have long been known: a smaller disk footprint, lower resource requirements (especially important with a high density of virtual machines on a physical host), and a smaller attack and maintenance surface (the server needs fewer patches). By the way, you can remove the WoW64 component, which runs 32-bit code, from Server Core, turning Windows Server into a truly 100% pure 64-bit operating system 😉

However, in Windows Server 2012 you can switch between Server Core, Minimal Server Interface and Server with a GUI at any time after installation, so an interesting scenario is to install Server with a GUI, configure the server and then switch it to Server Core.

For additional disk space savings, Windows Server 2012 lets you completely remove the binaries of unused roles and features after the server has been installed and configured.

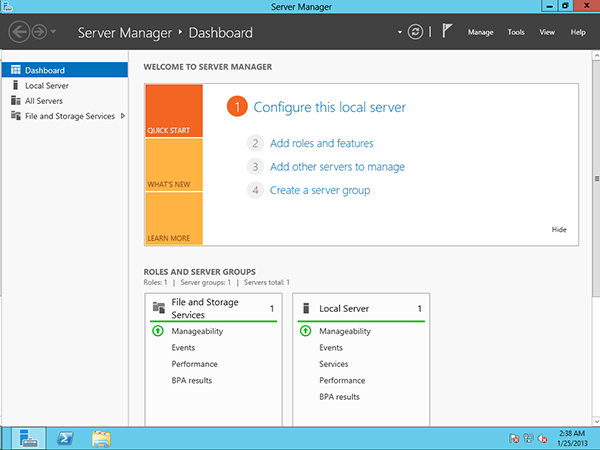

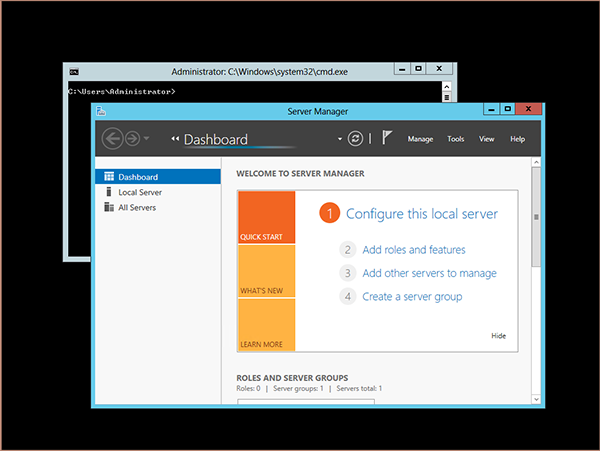

Administration – New Server Manager

Windows Server 2012 introduces a new Server Manager. Its most interesting capability is managing several servers at once by applying a single operation to all of them.

The interface now includes a list of pending administration tasks. For example, after the DHCP server is installed, a task appears prompting the administrator to configure it (set up scopes, etc.).

Personal impression: a pleasant surprise. This is perhaps the first Server Manager that I did not close immediately and whose automatic startup I did not disable.

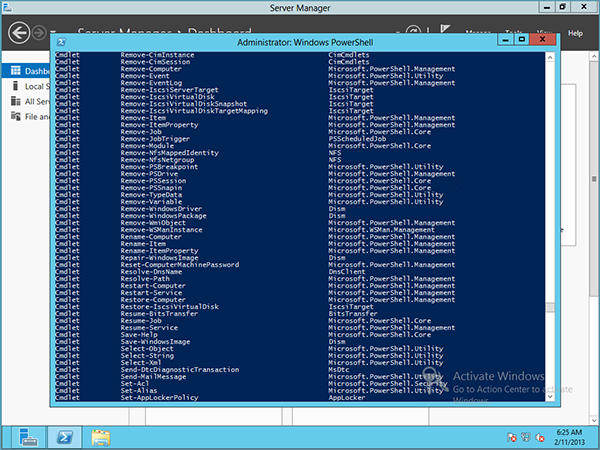

PowerShell

PowerShell in Windows Server 2012 has been greatly expanded and now includes more than 2,400 (!) cmdlets. PowerShell has become the de facto standard for command-line management. Almost all functionality can be managed via PowerShell, with perhaps very few exceptions.

This approach is a welcome one. Remember the zoo of tools: the command-line utilities that shipped with the system, the Support Tools, the Resource Kit, the special utilities Microsoft wrote for its own use, and more. Each had its own syntax, and the only way to combine two of them was to parse the text output of one and feed it to the other.

Feel the difference: uniform syntax, objects passed between cmdlets, and remote management through the standardized WS-Management interface.
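A small Python analogy shows the difference between parsing text and passing objects. It uses Python rather than PowerShell, and the service data and helper names are invented. The text-based consumer splits each line on whitespace and silently breaks on names that contain spaces. The object-based consumer filters typed records directly, much as something like `Get-Service | Where-Object Status -eq Running` does in PowerShell 3.0.

```python
from dataclasses import dataclass

# "Zoo" style: one tool prints a text table and the next tool re-parses it.
TEXT_OUTPUT = """\
Name            Status
Print Spooler   Running
DHCP Client     Stopped
"""


def parse_text(output: str) -> list[tuple[str, str]]:
    rows = []
    for line in output.splitlines()[1:]:  # skip the header line
        fields = line.split()             # naive whitespace split
        rows.append((fields[0], fields[1]))  # wrong for names containing spaces
    return rows


@dataclass
class Service:
    name: str
    status: str


def get_services() -> list[Service]:
    # Object style: the producer hands over typed records, so no parsing is needed.
    return [Service("Print Spooler", "Running"), Service("DHCP Client", "Stopped")]


def running(services: list[Service]) -> list[str]:
    return [s.name for s in services if s.status == "Running"]


print(parse_text(TEXT_OUTPUT))  # [('Print', 'Spooler'), ('DHCP', 'Client')]
print(running(get_services()))  # ['Print Spooler']
```

The text version returns plausible-looking but wrong pairs without any error. This is the kind of silent breakage that object pipelines avoid.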

Interestingly, some utilities, such as netsh, warn that their functionality may be removed in future versions of Windows Server and advise you to move to PowerShell for management automation tasks.

Conclusion

Windows Server 2012 really does bring a lot of new and interesting functionality. The main changes focus on virtualization, storage and networking. This article covers the features that seemed most interesting to the author.

Some things we have not even mentioned, for example the innovations in the printing subsystem, VDI, IIS, BranchCache, DHCP…

A trial version of Windows Server 2012 is available for download from Microsoft.

If you are interested in applying the capabilities of Windows Server 2012 to your enterprise infrastructure, write to us. We will be glad to discuss it.
